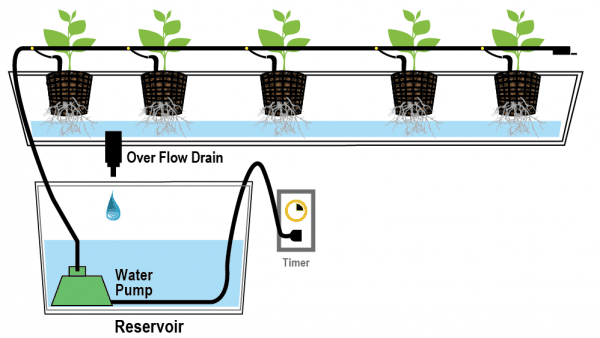

This project demonstrates how to use the Machine Learning Environment to make a machine-learning model that identifies hand gestures and makes the Mars Rover move accordingly.

We are going to use the Hand Gesture Classifier of the Machine Learning Environment. The model works by analyzing the position of your hand using 21 data points.
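The exact preprocessing PictoBlox applies to the 21 points is not described here. The sketch below therefore shows only one common approach, under two assumptions: each point arrives as an (x, y) pair, and point 0 is the wrist (the MediaPipe Hands ordering). The function `landmarks_to_features` is a hypothetical name. It turns the points into a flat list of 42 numbers that no longer depends on where the hand sits in the frame or how large it appears.

```python
from typing import List, Tuple

NUM_LANDMARKS = 21  # the classifier works with 21 points per hand


def landmarks_to_features(landmarks: List[Tuple[float, float]]) -> List[float]:
    """Flatten 21 (x, y) hand points into 42 position- and size-normalized numbers.

    Assumption: point 0 is the wrist (MediaPipe Hands ordering); PictoBlox's
    internal preprocessing may differ.
    """
    if len(landmarks) != NUM_LANDMARKS:
        raise ValueError(f"expected {NUM_LANDMARKS} points, got {len(landmarks)}")
    wrist_x, wrist_y = landmarks[0]
    # Put the wrist at the origin so the same gesture gives similar numbers
    # anywhere in the camera frame.
    shifted = [(x - wrist_x, y - wrist_y) for x, y in landmarks]
    # Divide by the farthest point's distance from the wrist so a hand close to
    # the camera and one far away produce comparable values.
    scale = max((x * x + y * y) ** 0.5 for x, y in shifted) or 1.0
    features: List[float] = []
    for x, y in shifted:
        features.extend([x / scale, y / scale])
    return features


# Example: 21 made-up points spread along a horizontal line.
points = [(0.5 + 0.01 * i, 0.5) for i in range(NUM_LANDMARKS)]
features = landmarks_to_features(points)
print(len(features))  # 42
```

Normalizing against the wrist is a design choice for this sketch. A gesture is about the shape of the hand, not its location on screen.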

Hand Gesture Classifier Workflow

Follow the steps below:

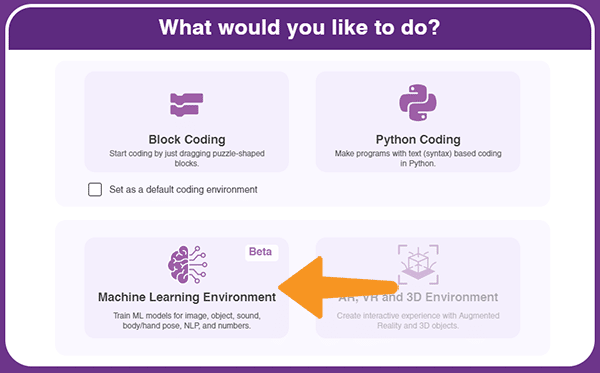

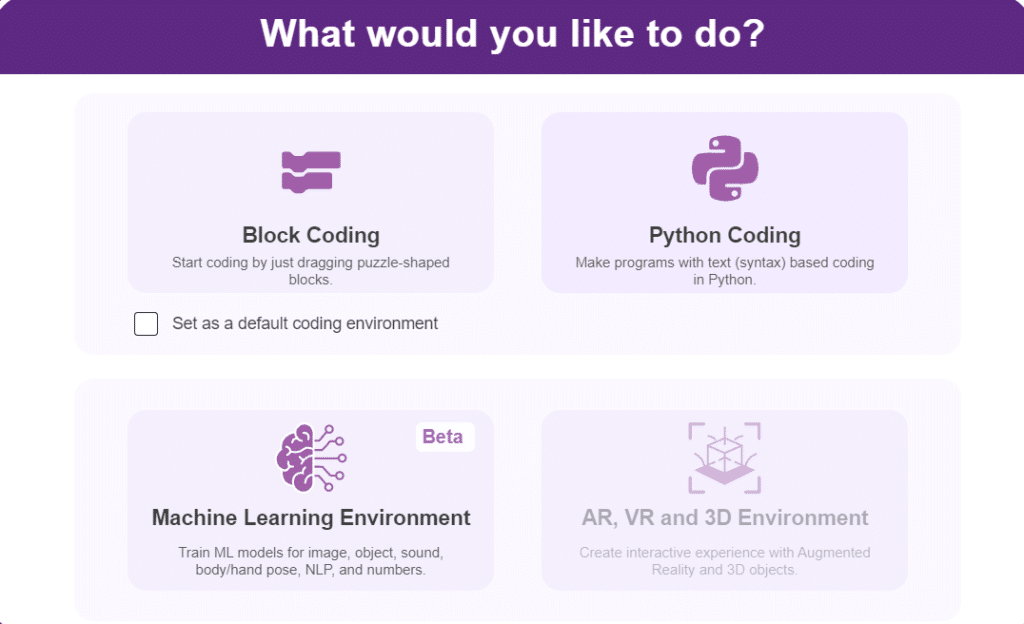

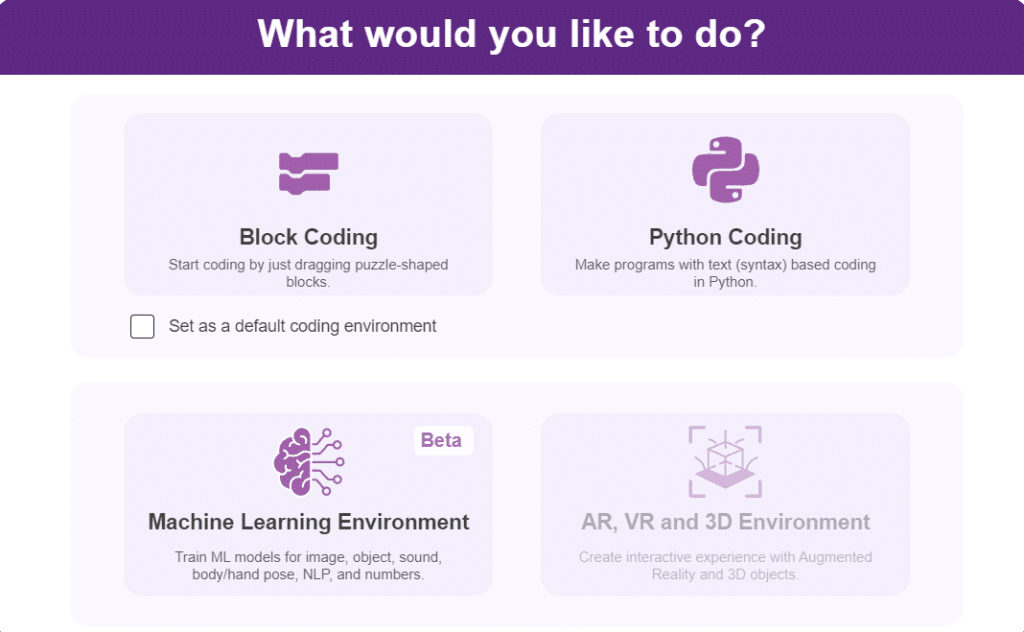

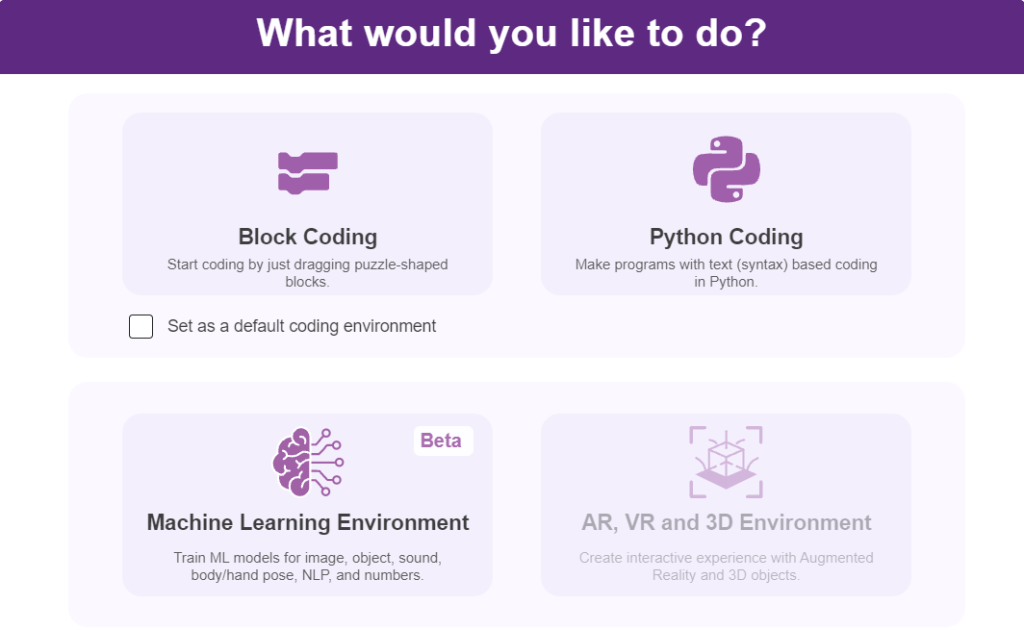

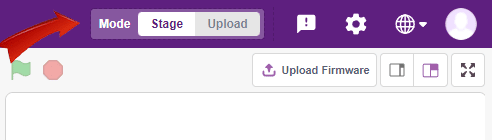

- Open PictoBlox and create a new file.

- Select the appropriate coding environment.

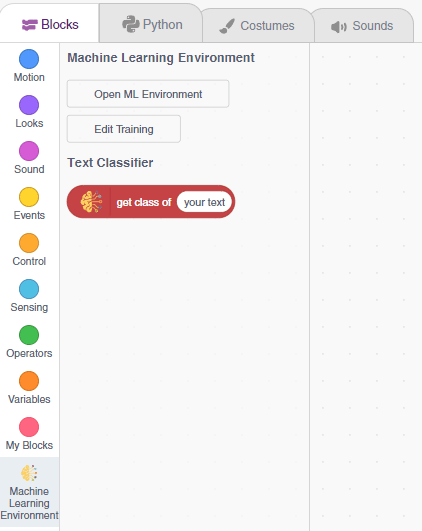

- Select the “Open ML Environment” option under the “Files” tab to access the ML Environment.

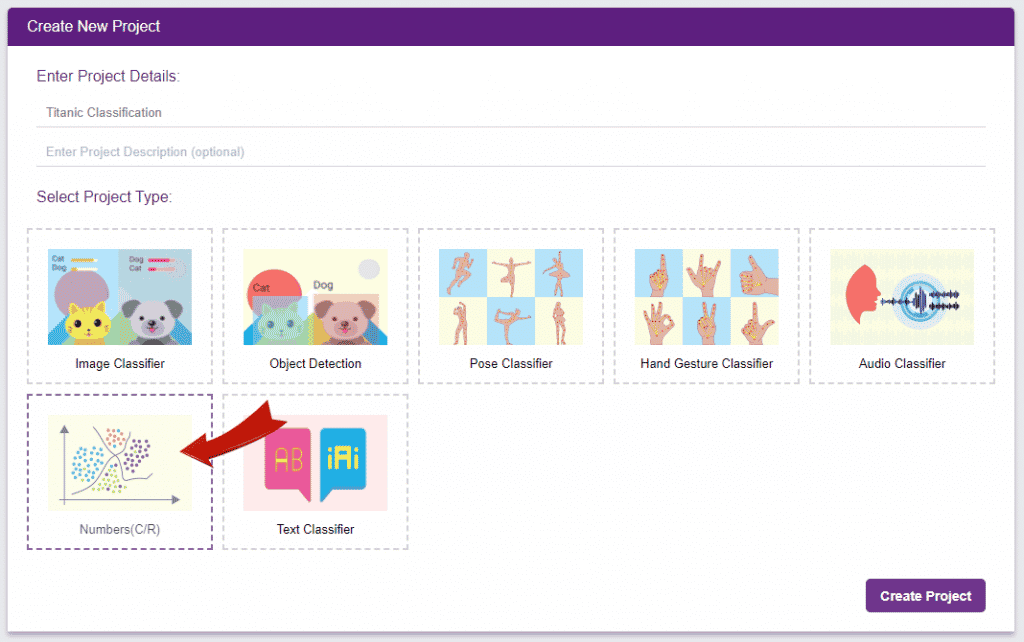

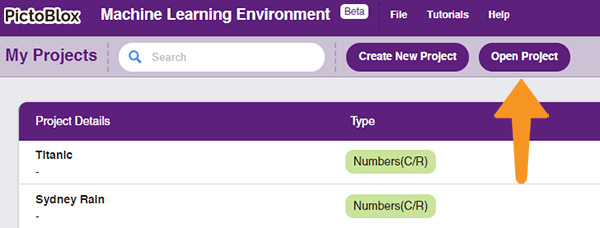

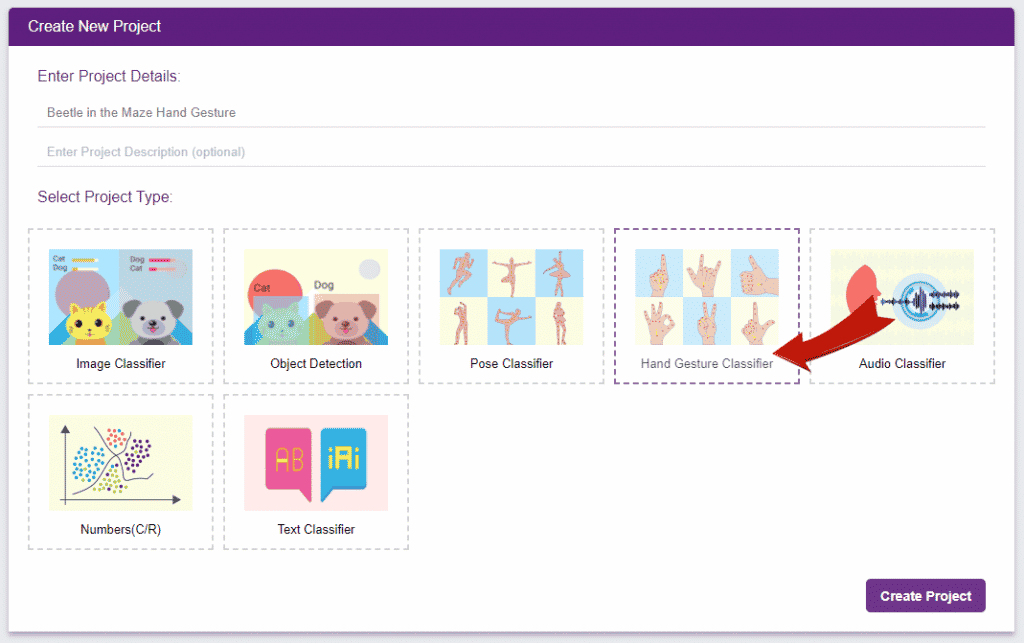

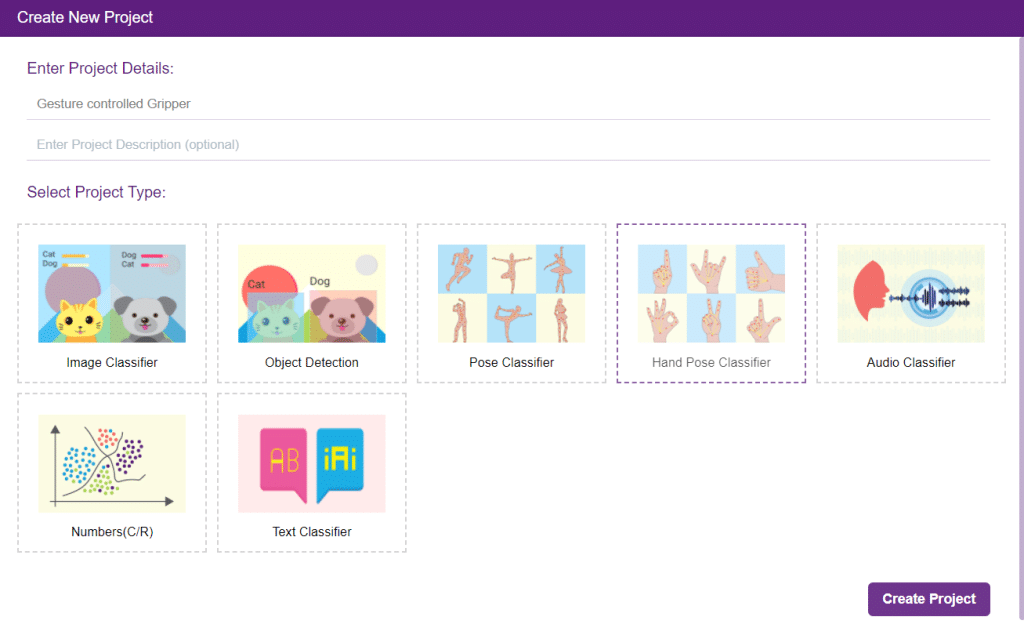

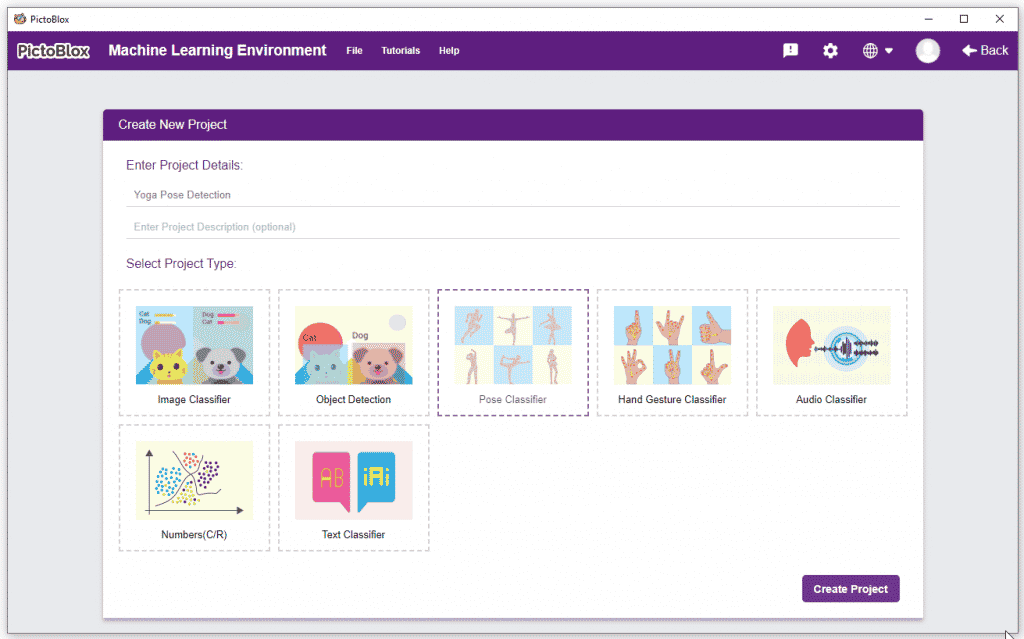

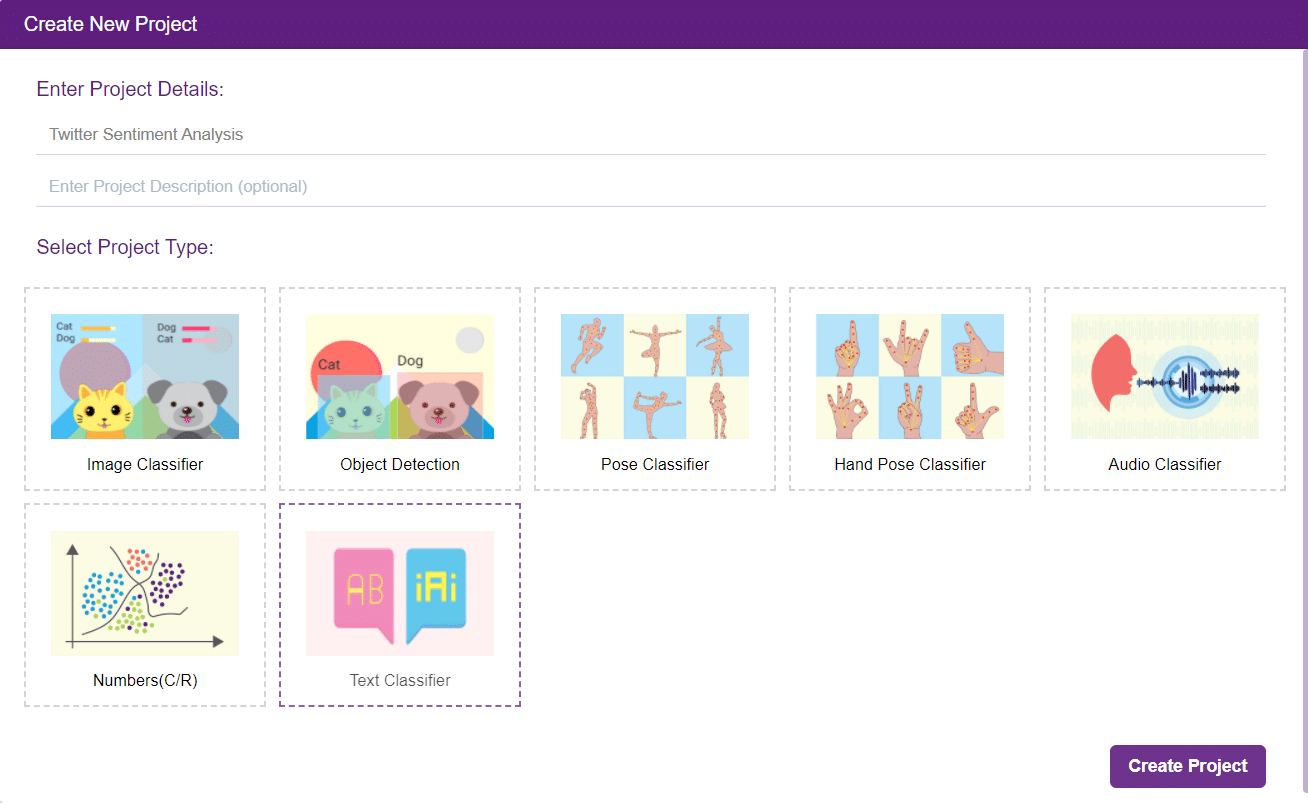

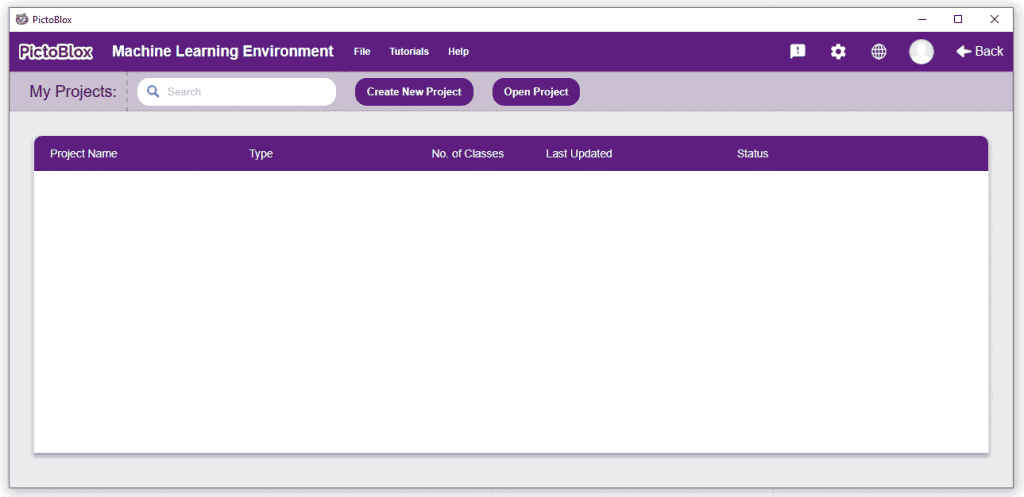

- Click on “Create New Project”.

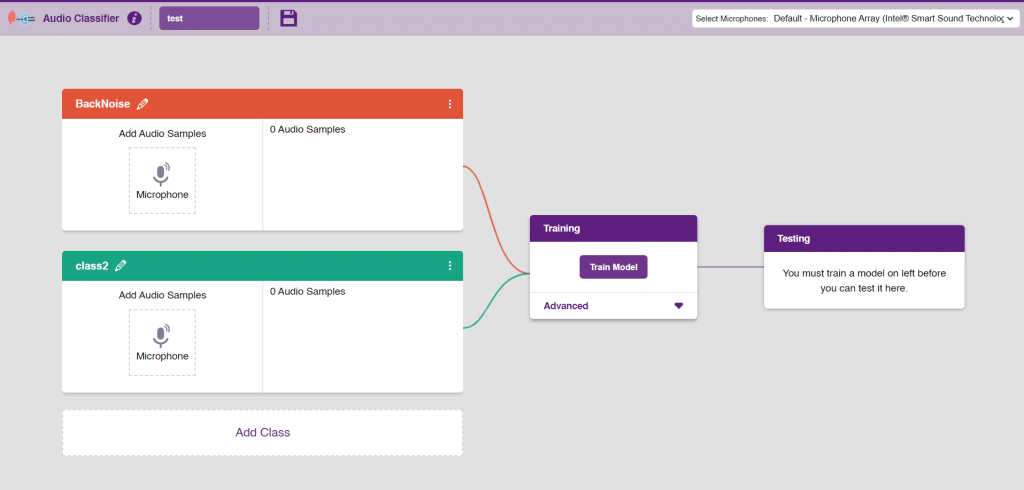

- A window will open. Type in a project name of your choice and select the “Hand Gesture Classifier” extension. Click the “Create Project” button to open the Hand Gesture Classifier window.

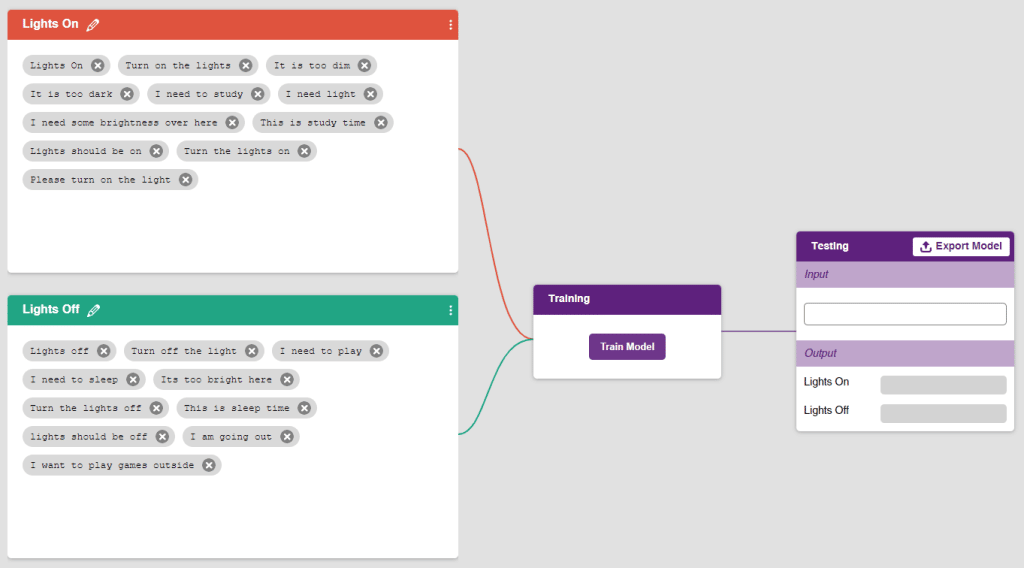

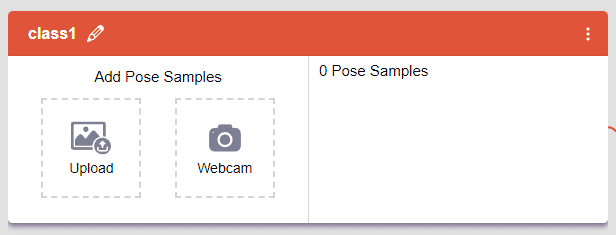

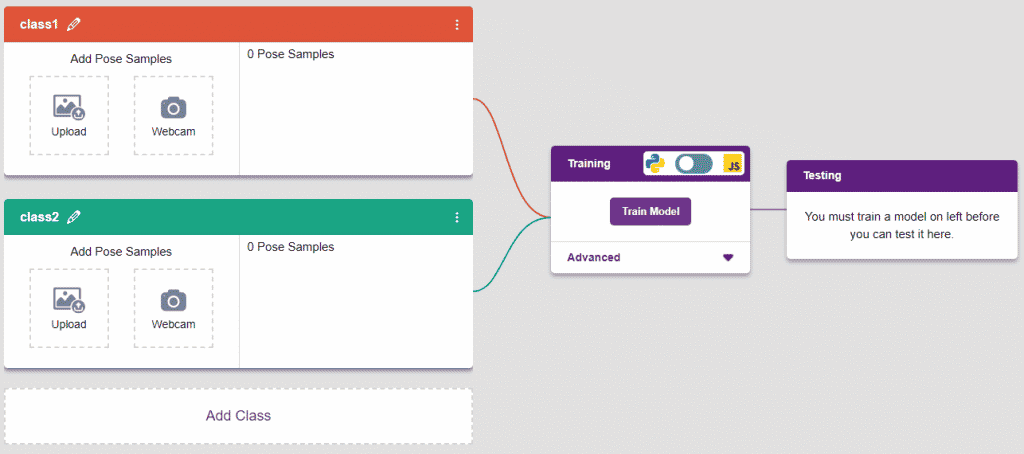

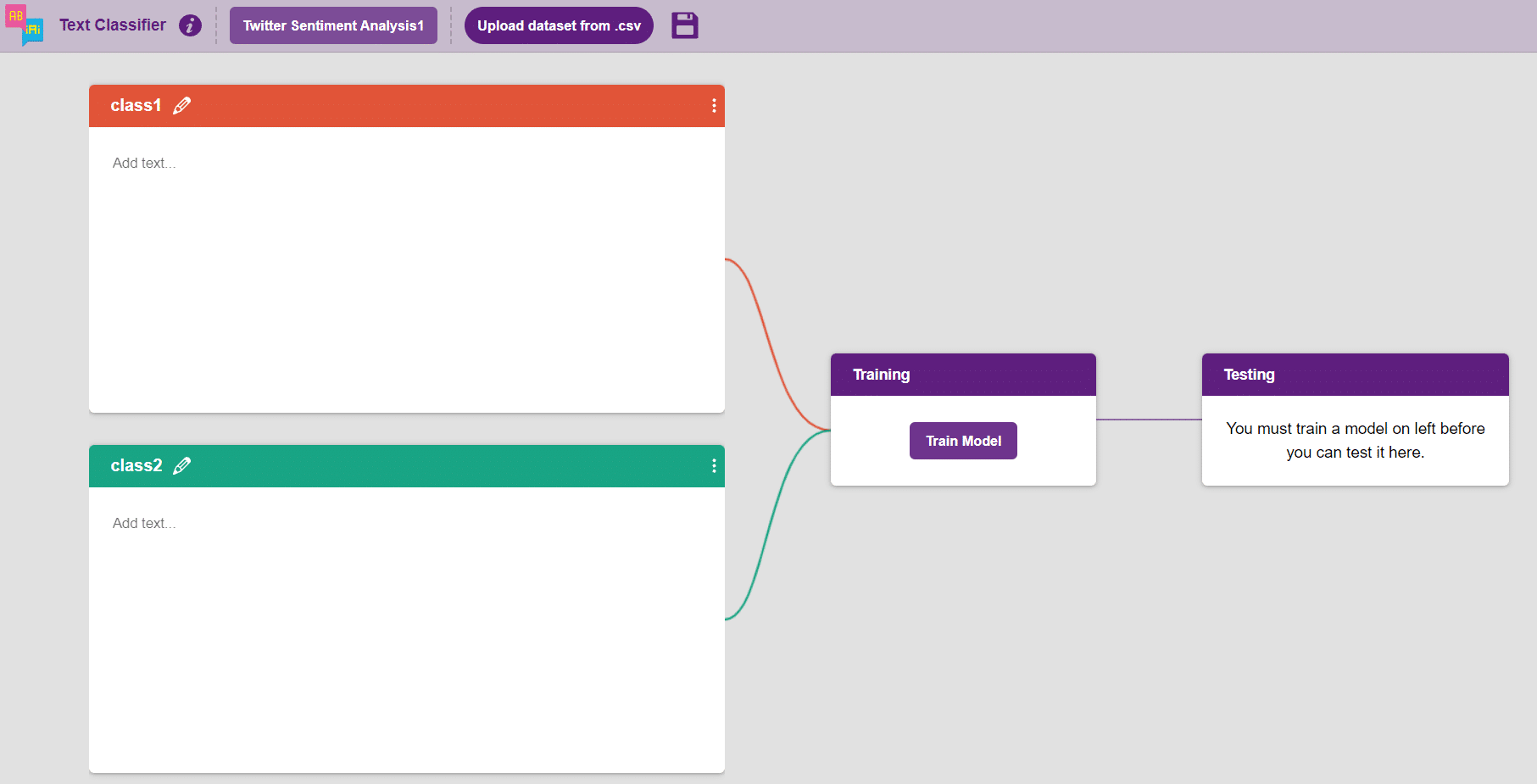

- You will see the Classifier workflow with two classes already created for you. Your environment is all set. Now it’s time to upload the data.

Class in Hand Gesture Classifier

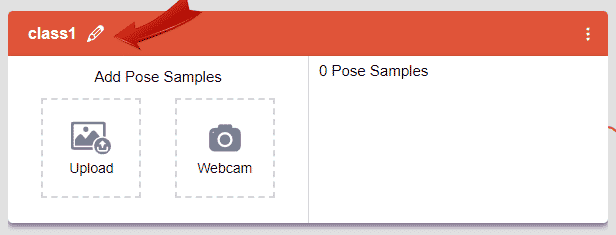

You have to provide two things for each class:

- Class Name: The name by which the class will be referred to.

- Hand Pose Data: This data can either be captured with the webcam or uploaded from local storage.

Note: You can add more classes to the project using the Add Class button.
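To make the two parts of a class concrete, here is a hypothetical Python model of the classes in a project. `GestureClass` and `add_class` are invented for this sketch and are not part of PictoBlox. Each sample is assumed to be a list of numbers, such as the coordinates of the 21 hand points from one pose.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class GestureClass:
    """Hypothetical model of one class: a name plus its hand pose samples."""
    name: str
    samples: List[List[float]] = field(default_factory=list)

    def rename(self, new_name: str) -> None:
        """Counterpart of the edit button next to the class name."""
        if not new_name.strip():
            raise ValueError("class name cannot be empty")
        self.name = new_name.strip()

    def add_sample(self, features: List[float]) -> None:
        """Store one hand pose, e.g. captured from the webcam or loaded from a file."""
        self.samples.append(list(features))


def add_class(classes: List[GestureClass], name: str) -> GestureClass:
    """Counterpart of the Add Class button: append a new, empty class with a unique name."""
    if any(c.name == name for c in classes):
        raise ValueError(f"a class named {name!r} already exists")
    new_class = GestureClass(name)
    classes.append(new_class)
    return new_class


# A project starts with two classes; add a third and one sample.
classes = [GestureClass("forward"), GestureClass("backward")]
add_class(classes, "left")
classes[0].add_sample([0.0] * 42)
print([c.name for c in classes])  # ['forward', 'backward', 'left']
```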

Adding Data to Class

You can perform the following operations to manage the data in a class.

- Naming the Class: You can rename the class by clicking on the edit button.

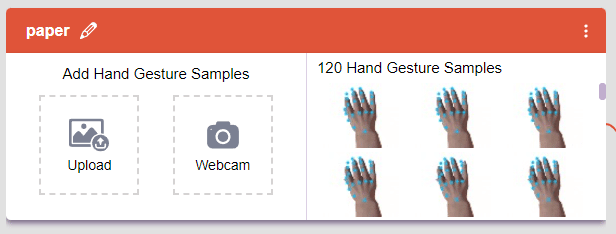

- Adding Data to the Class: You can add data using the webcam or by uploading files from a local folder.

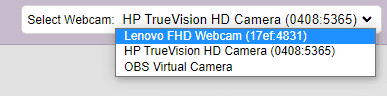

- Webcam:

Note: You must add at least 20 samples to each of your classes for your model to train. More samples will lead to better results.
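A quick way to check the 20-sample minimum before training is sketched below. It assumes the data is held as a dictionary from class name to a list of samples. That layout is hypothetical and is not PictoBlox's storage format. The helper reports how many more samples each short class needs.

```python
from typing import Dict, List

MIN_SAMPLES_PER_CLASS = 20  # minimum number of samples per class required for training


def samples_still_needed(dataset: Dict[str, List[List[float]]],
                         minimum: int = MIN_SAMPLES_PER_CLASS) -> Dict[str, int]:
    """Map each class that is below the minimum to the number of extra samples it needs."""
    return {
        name: minimum - len(samples)
        for name, samples in dataset.items()
        if len(samples) < minimum
    }


dataset = {"forward": [[0.0] * 42] * 25, "left": [[0.0] * 42] * 12}
missing = samples_still_needed(dataset)
for name, count in missing.items():
    print(f"Add {count} more samples to '{name}' before training.")
```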

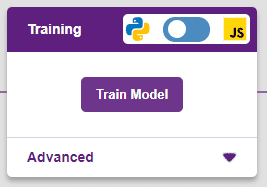

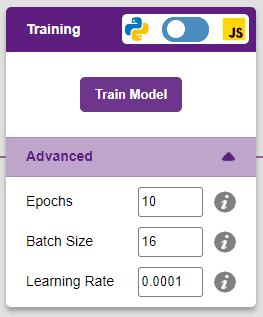

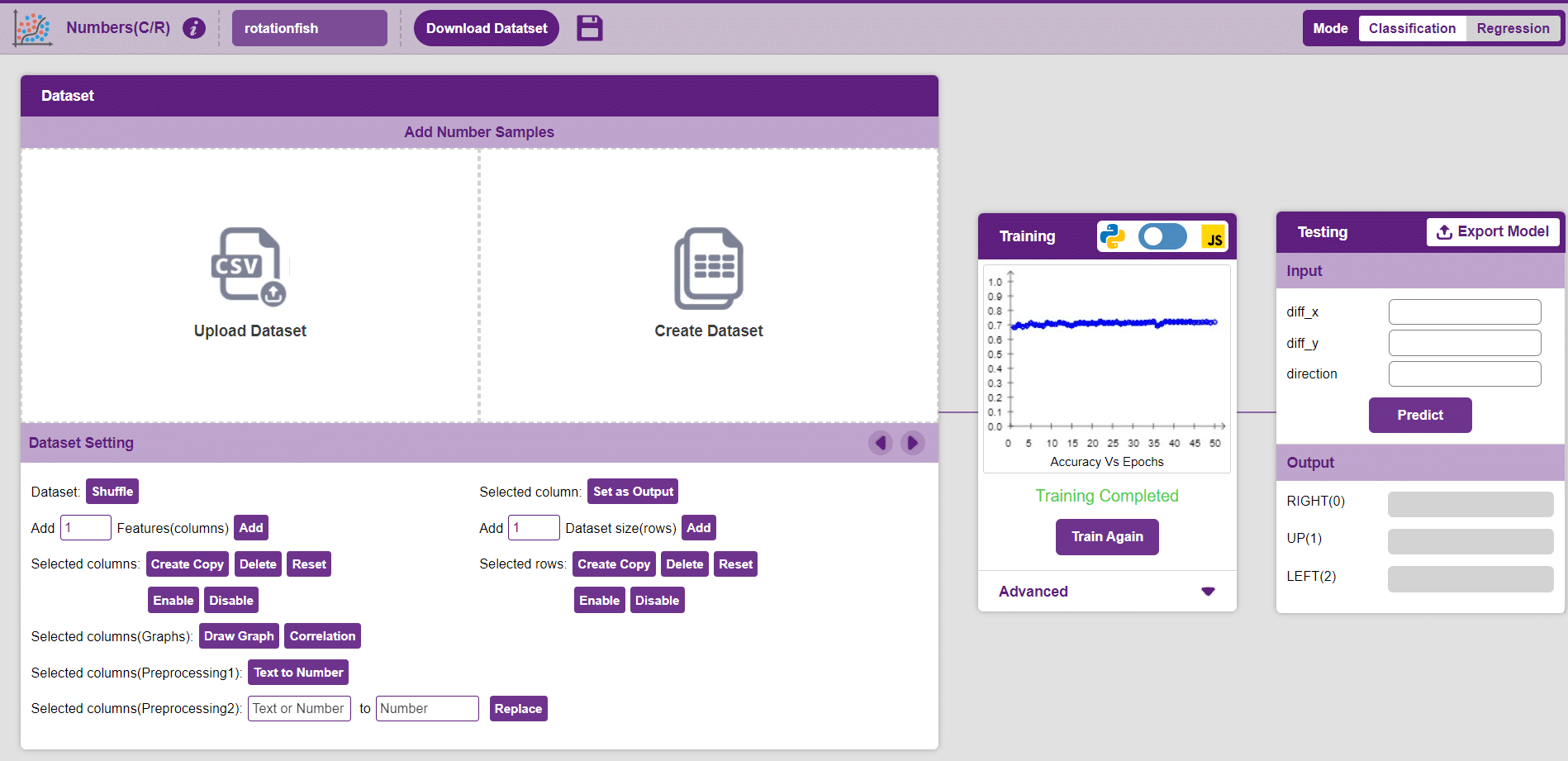

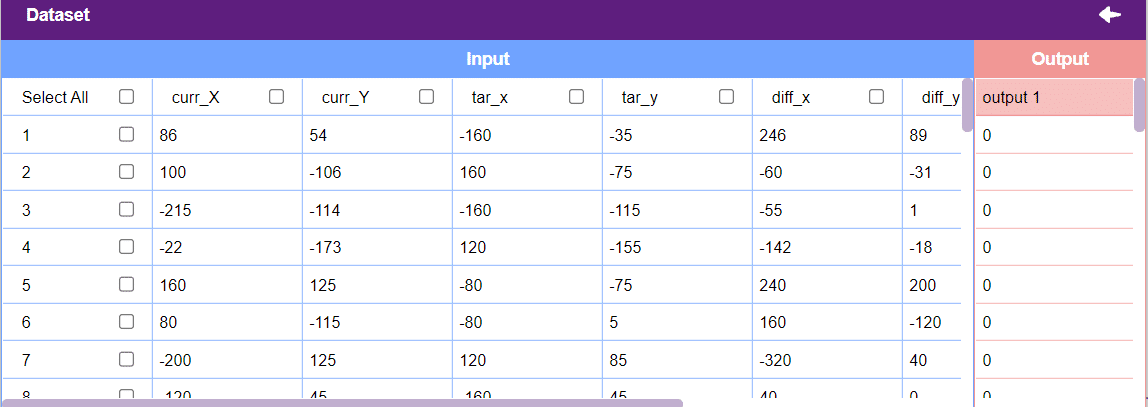

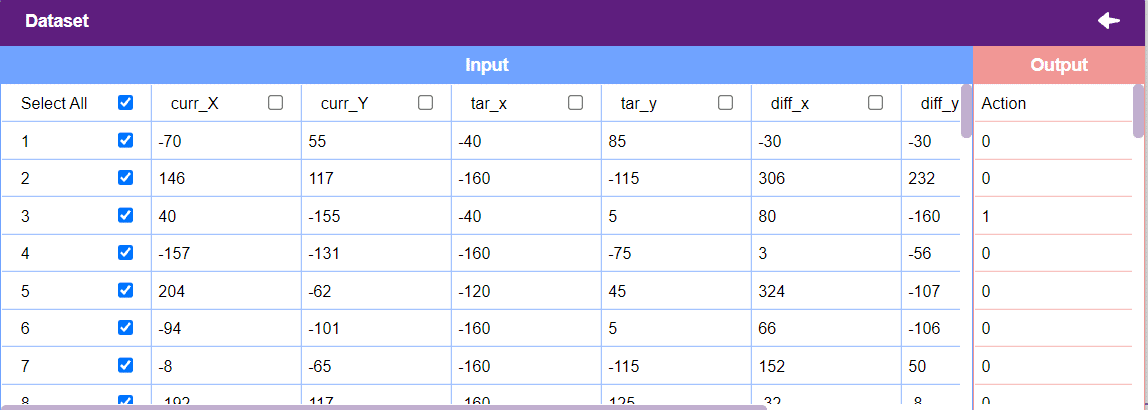

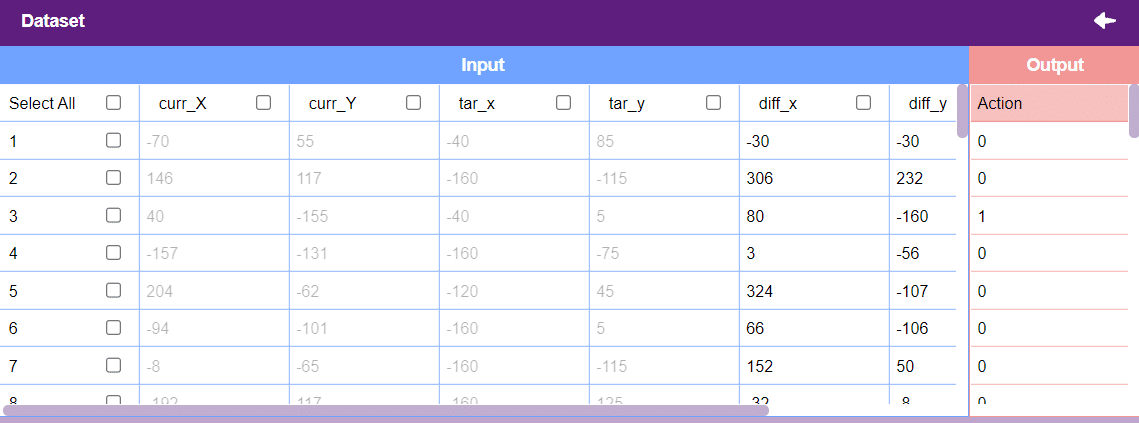

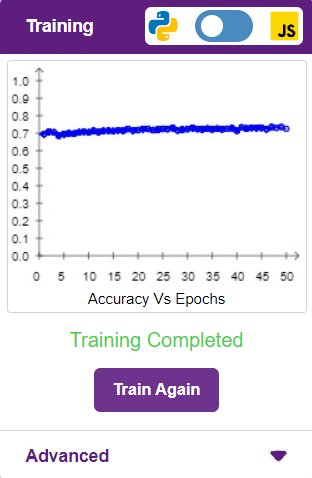

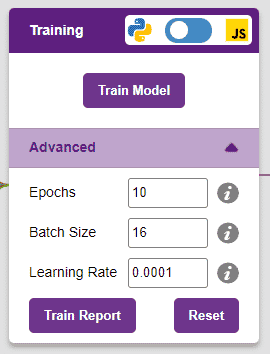

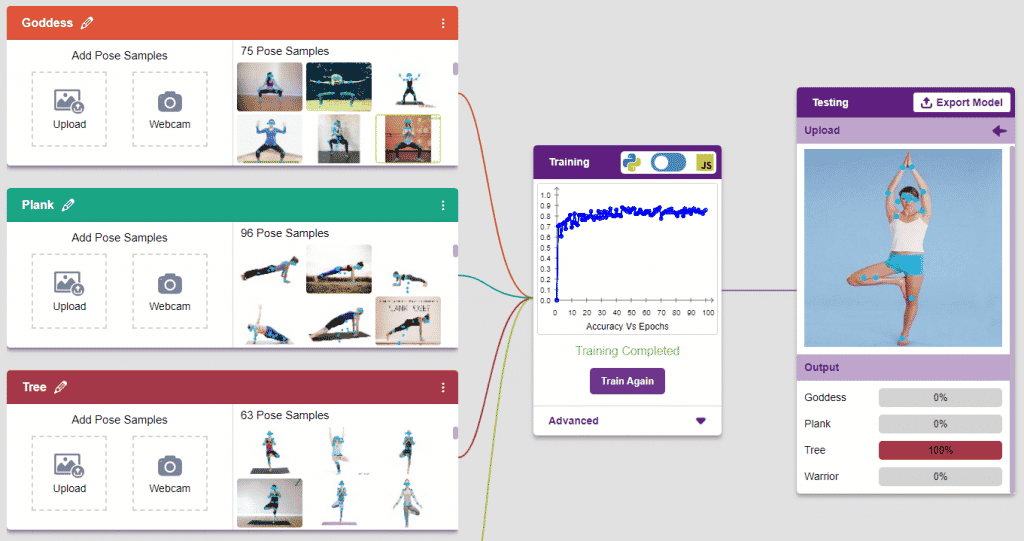

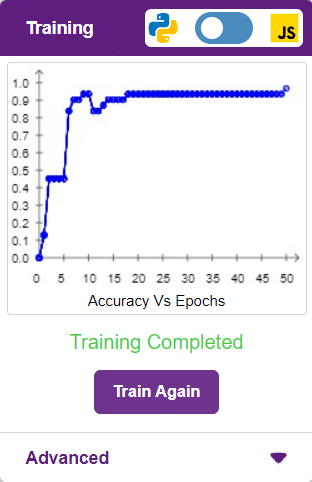

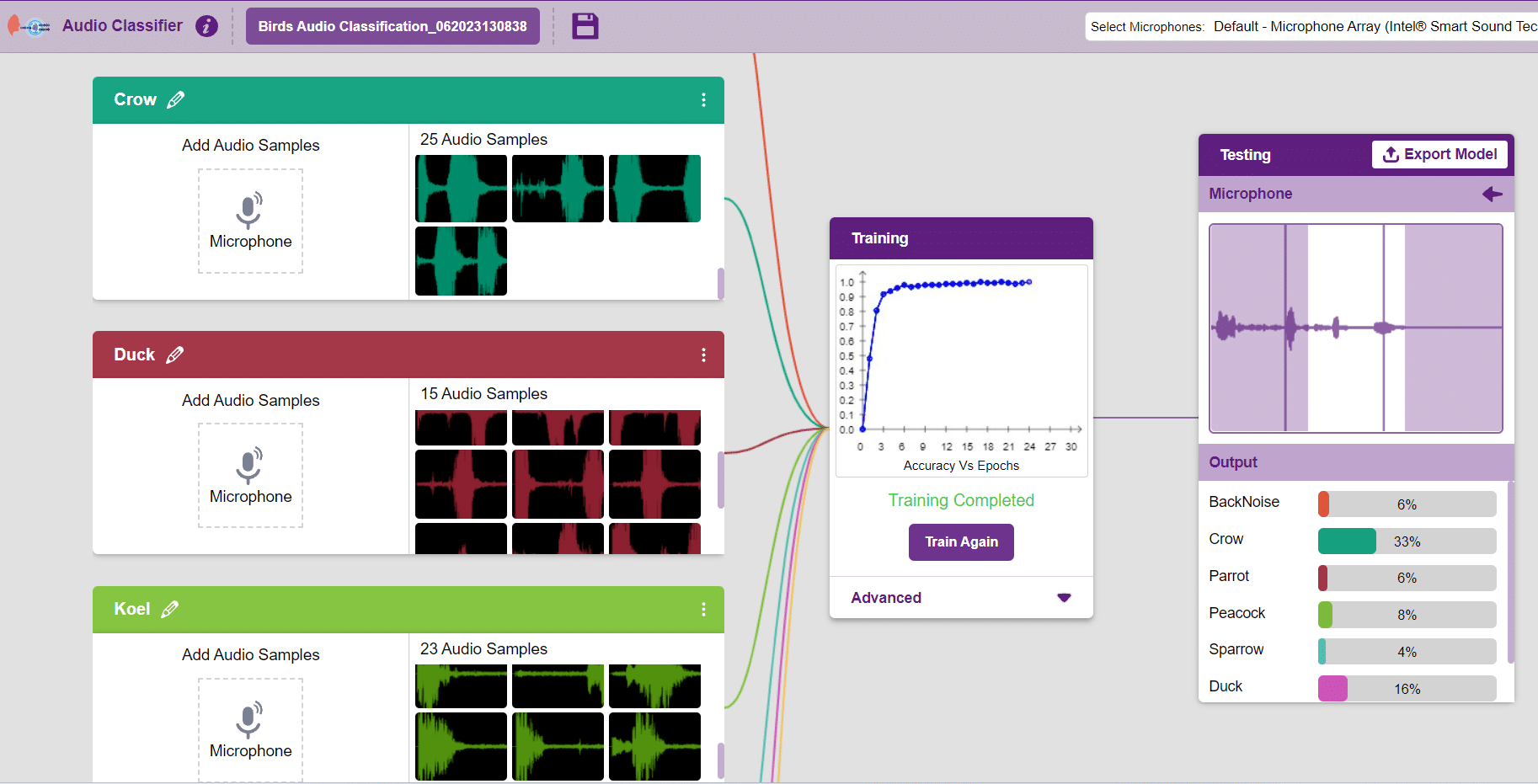

Training the Model

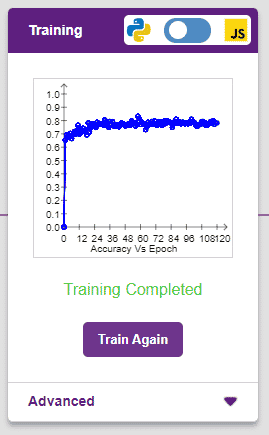

Once the data is added, it can be used to train the model. Training extracts meaningful information from the hand pose data and uses it to update the model’s weights. Once these weights are saved, the model can make predictions on previously unseen data.
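The model PictoBlox actually trains is not specified here. To show what “updating the weights” means, the sketch below swaps in the simplest weight-based classifier: softmax regression trained with gradient descent. In each epoch it compares the predicted class probabilities with the true labels and nudges every weight against its error gradient. `train`, `predict`, and the toy data are hypothetical.

```python
import math
import random
from typing import List, Tuple

Weights = List[List[float]]


def softmax(scores: List[float]) -> List[float]:
    """Turn raw scores into probabilities that sum to 1 (shifted by the max for stability)."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def class_scores(weights: Weights, biases: List[float], x: List[float]) -> List[float]:
    """One linear score per class: dot(weights[c], x) + biases[c]."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]


def train(samples: List[List[float]], labels: List[int], num_classes: int,
          epochs: int = 200, lr: float = 0.5, seed: int = 0) -> Tuple[Weights, List[float]]:
    """Fit softmax-regression weights with full-batch gradient descent."""
    rng = random.Random(seed)
    dim = len(samples[0])
    weights = [[rng.uniform(-0.01, 0.01) for _ in range(dim)] for _ in range(num_classes)]
    biases = [0.0] * num_classes
    n = len(samples)
    for _ in range(epochs):
        grad_w = [[0.0] * dim for _ in range(num_classes)]
        grad_b = [0.0] * num_classes
        for x, y in zip(samples, labels):
            probs = softmax(class_scores(weights, biases, x))
            for c in range(num_classes):
                # Cross-entropy gradient for class c's score: predicted prob minus target (1 or 0).
                err = probs[c] - (1.0 if c == y else 0.0)
                grad_b[c] += err
                for i in range(dim):
                    grad_w[c][i] += err * x[i]
        # The weight update: step every parameter against its average gradient.
        for c in range(num_classes):
            biases[c] -= lr * grad_b[c] / n
            for i in range(dim):
                weights[c][i] -= lr * grad_w[c][i] / n
    return weights, biases


def predict(weights: Weights, biases: List[float], x: List[float]) -> int:
    """Index of the class with the highest score (same as the highest probability)."""
    scores = class_scores(weights, biases, x)
    return max(range(len(scores)), key=scores.__getitem__)


# Toy data: class 0 clusters near (1, 0), class 1 near (0, 1).
samples = [[1.0, 0.1], [0.9, 0.0], [0.1, 1.0], [0.0, 0.9]]
labels = [0, 0, 1, 1]
weights, biases = train(samples, labels, num_classes=2)
print(predict(weights, biases, [1.0, 0.0]), predict(weights, biases, [0.0, 1.0]))
```

The saved `weights` and `biases` are all that is needed to classify new inputs, which is why saving them lets the model predict on unseen data.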

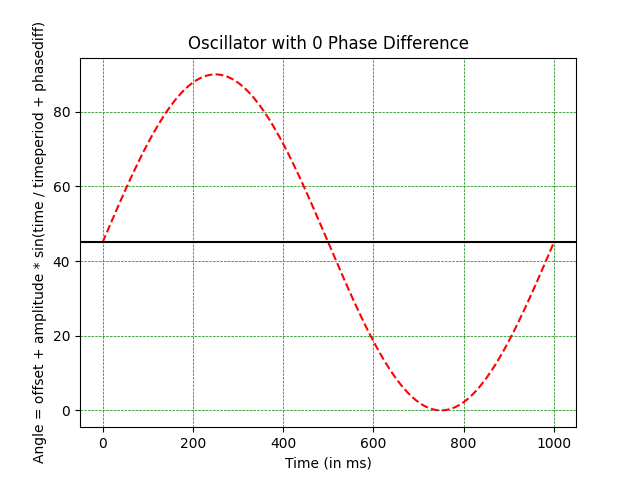

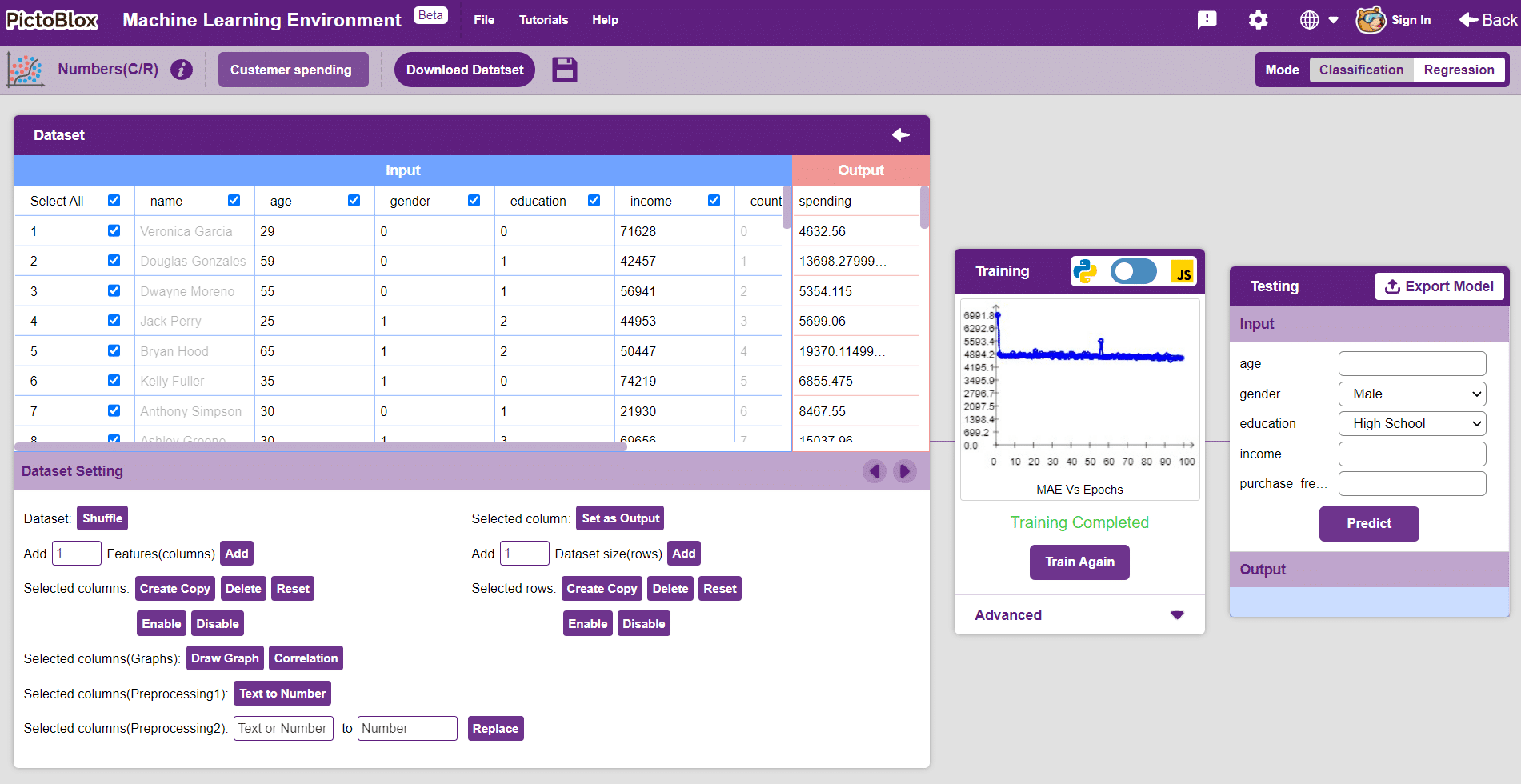

The accuracy of the model should increase over time. The x-axis of the graph shows the epochs, and the y-axis shows the accuracy at the corresponding epoch. Accuracy ranges from 0 to 1; the higher the reading in the accuracy graph, the better the model.
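Accuracy is the fraction of samples the model classifies correctly, which is why it always lies between 0 and 1. Here is a minimal sketch with made-up labels; `accuracy` is a hypothetical helper, not a PictoBlox function.

```python
from typing import Sequence


def accuracy(predicted: Sequence[str], actual: Sequence[str]) -> float:
    """Fraction of predictions that match the true labels; always between 0 and 1."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    if not actual:
        raise ValueError("accuracy is undefined for zero samples")
    correct = sum(p == a for p, a in zip(predicted, actual))
    return correct / len(actual)


predicted = ["forward", "left", "left", "right"]
actual = ["forward", "left", "right", "right"]
print(accuracy(predicted, actual))  # 3 of 4 correct -> 0.75
```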

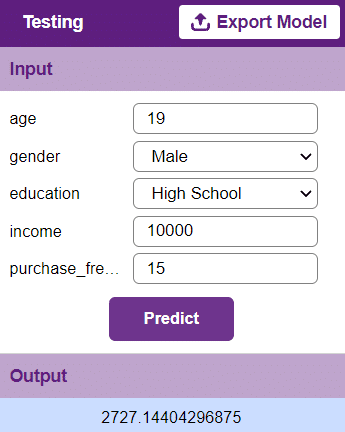

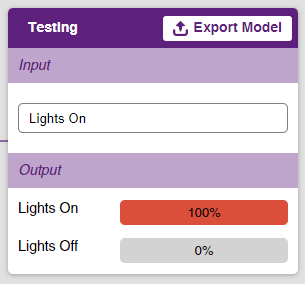

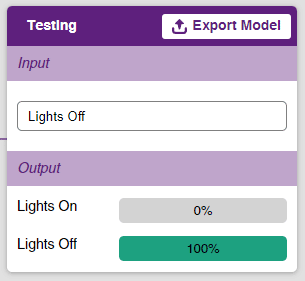

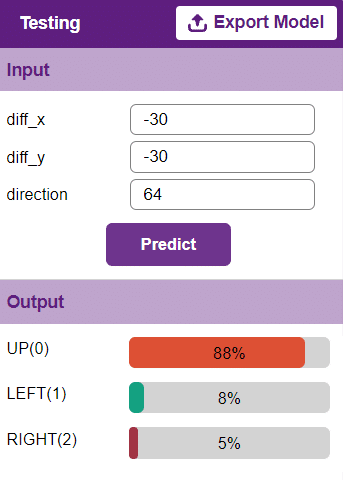

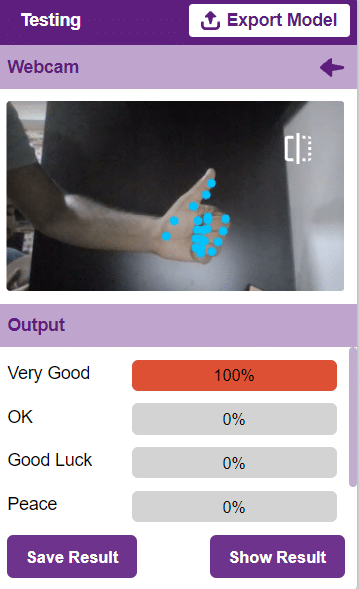

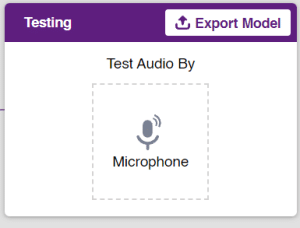

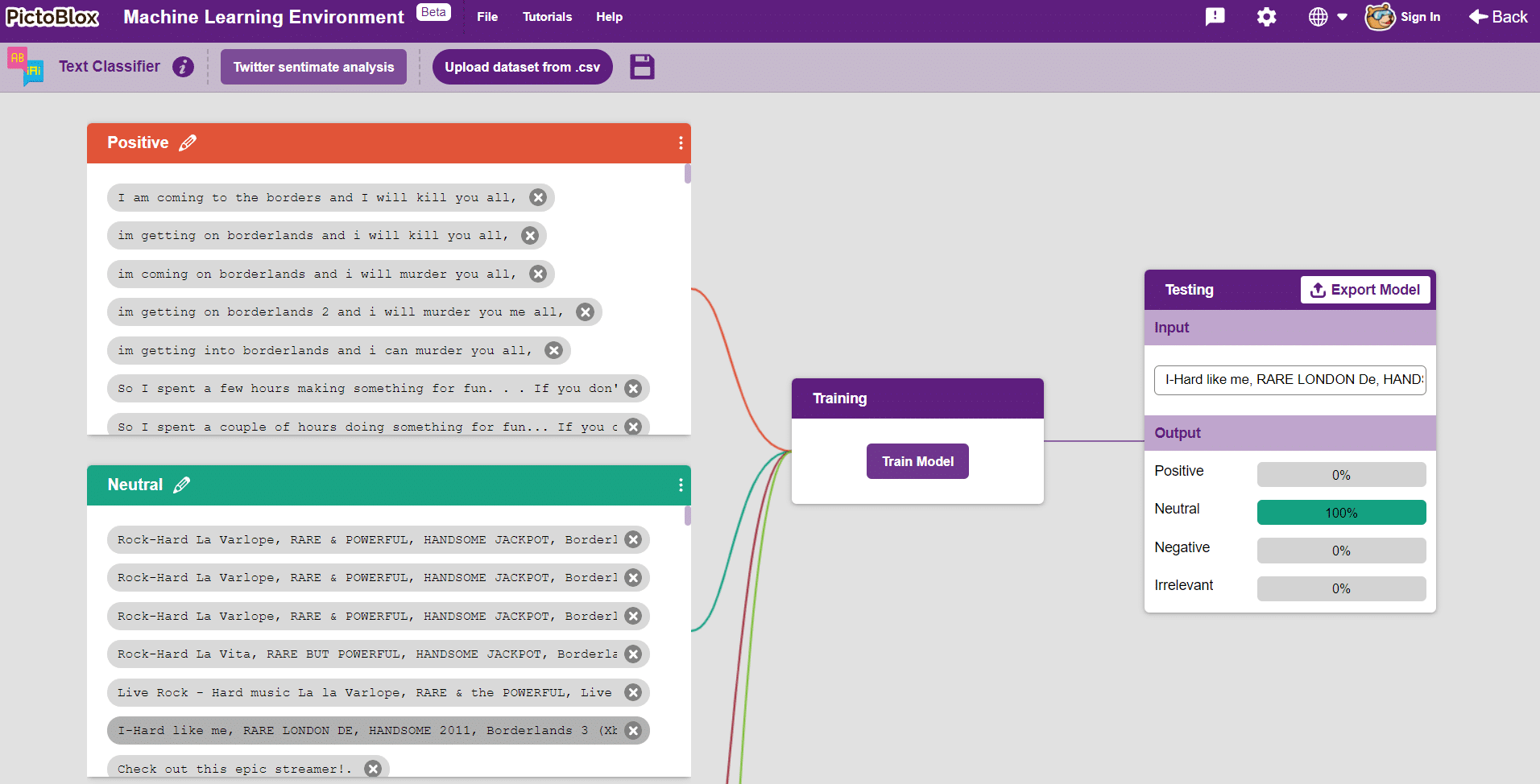

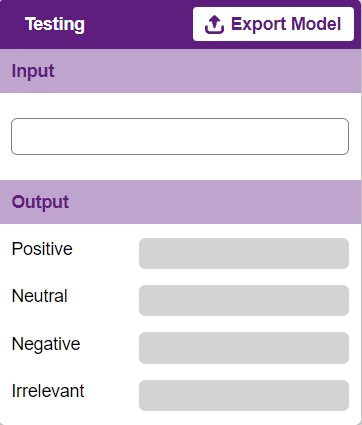

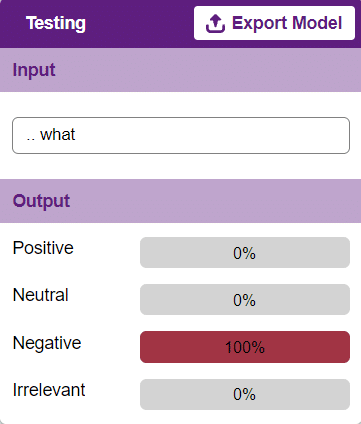

Testing the Model

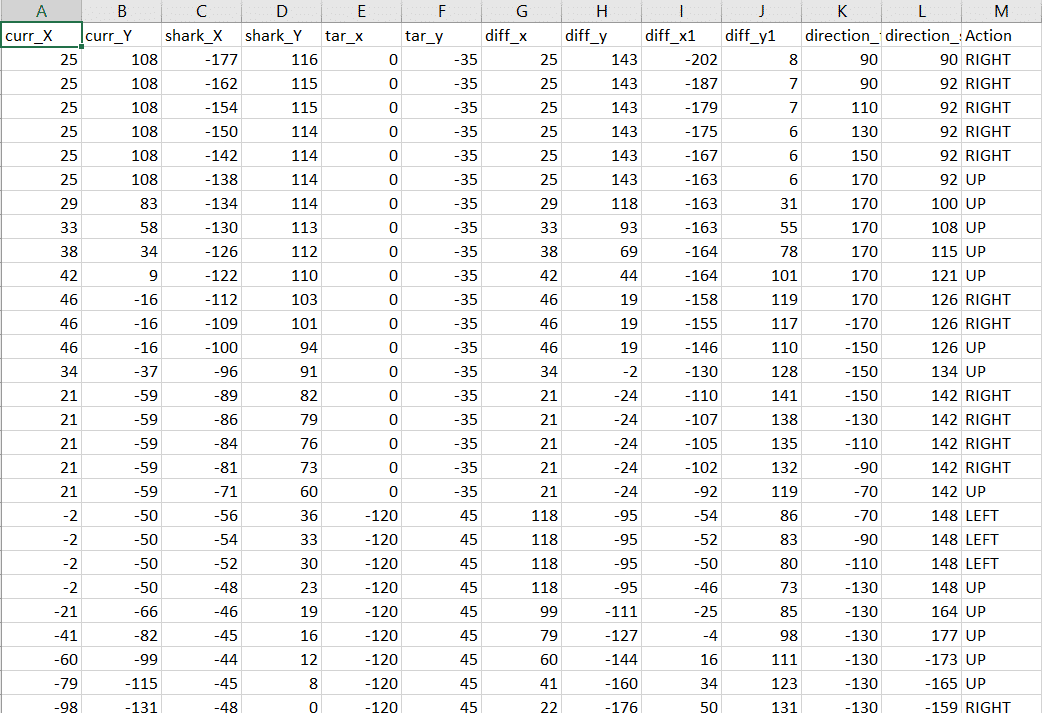

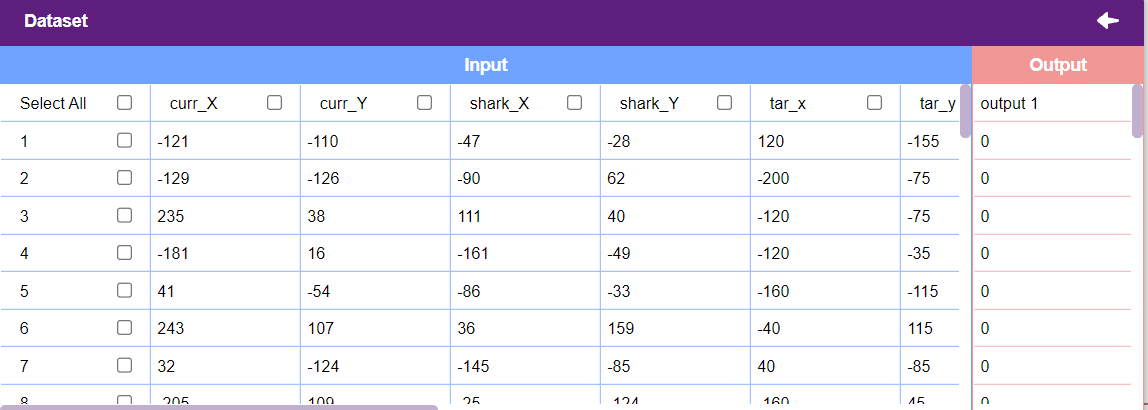

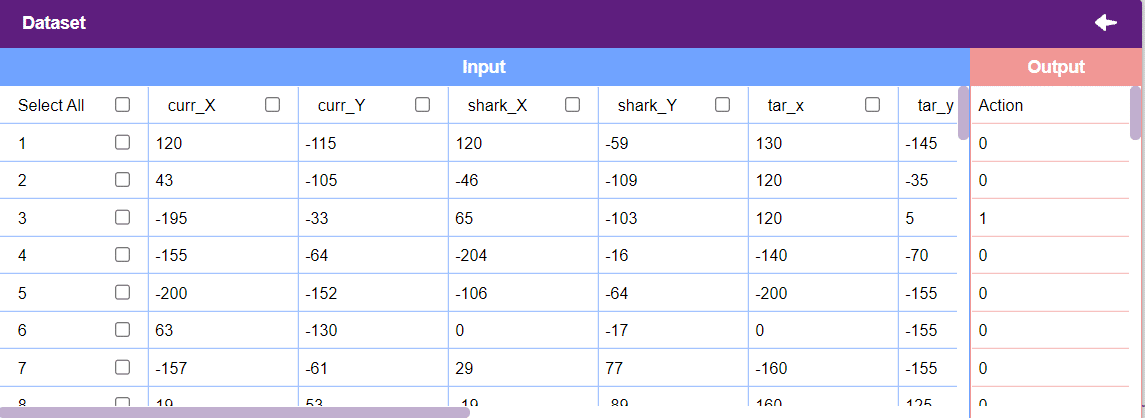

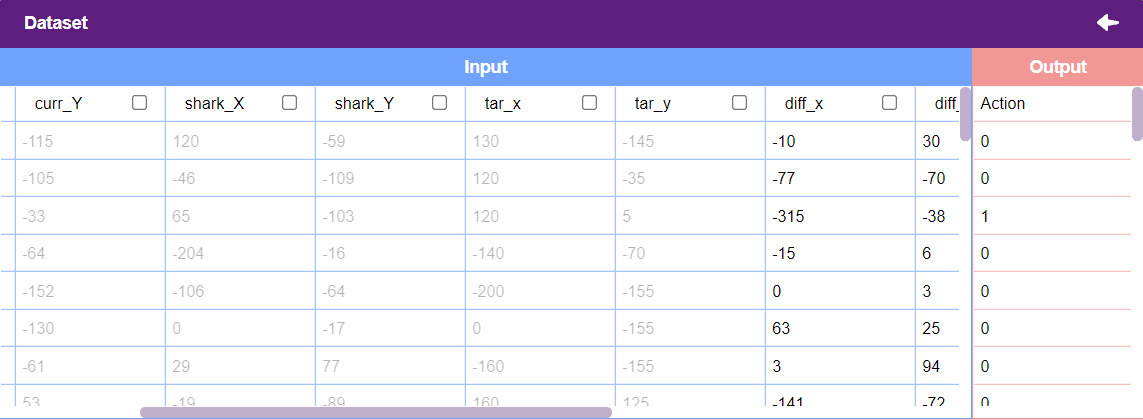

To test the model, simply enter the input values in the “Testing” panel and click on the “Predict” button.

The model will return the probability of the input belonging to each of the classes.
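Once you have the per-class probabilities, acting on them usually means taking the most likely class. The sketch below assumes the probabilities come as a dictionary keyed by class name. The optional `min_confidence` cut-off is a hypothetical addition, useful when no gesture is clearly shown.

```python
from typing import Dict, Optional


def top_class(probabilities: Dict[str, float], min_confidence: float = 0.0) -> Optional[str]:
    """Most probable class name, or None if even the best probability is below min_confidence."""
    if not probabilities:
        raise ValueError("no class probabilities given")
    if abs(sum(probabilities.values()) - 1.0) > 1e-6:
        raise ValueError("class probabilities should sum to 1")
    best = max(probabilities, key=lambda name: probabilities[name])
    return best if probabilities[best] >= min_confidence else None


probs = {"forward": 0.70, "backward": 0.10, "left": 0.15, "right": 0.05}
print(top_class(probs))                      # forward
print(top_class(probs, min_confidence=0.8))  # None
```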

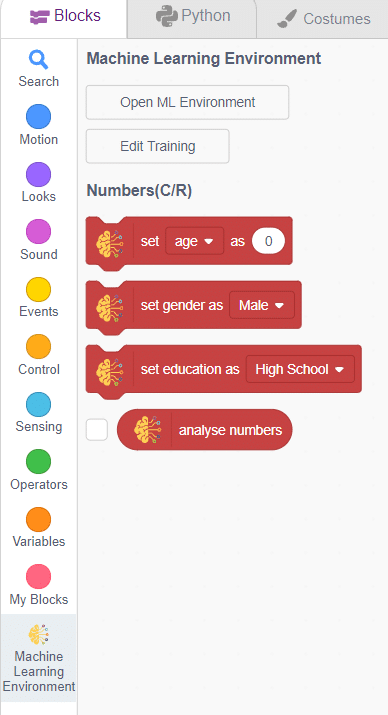

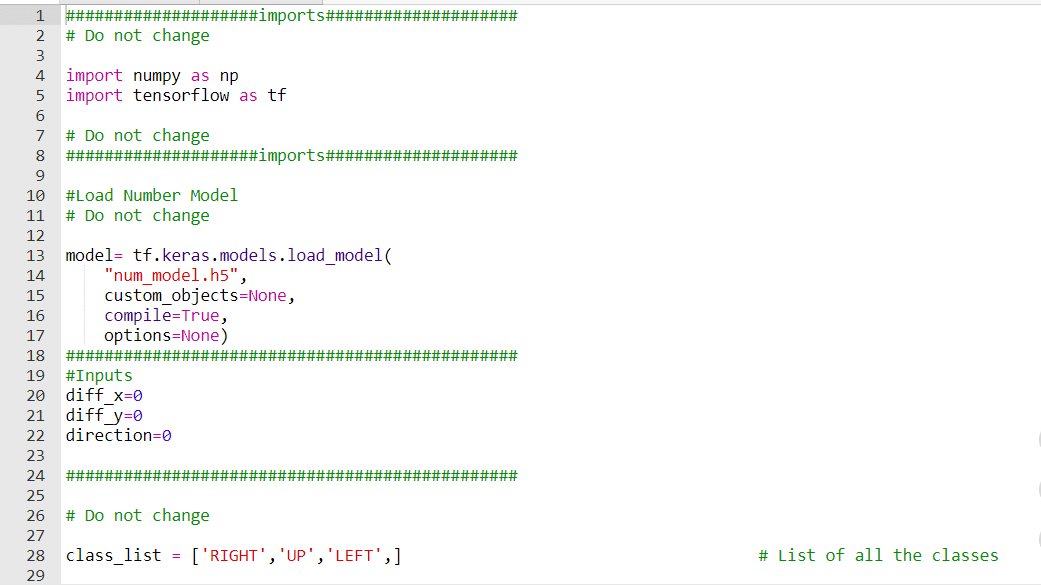

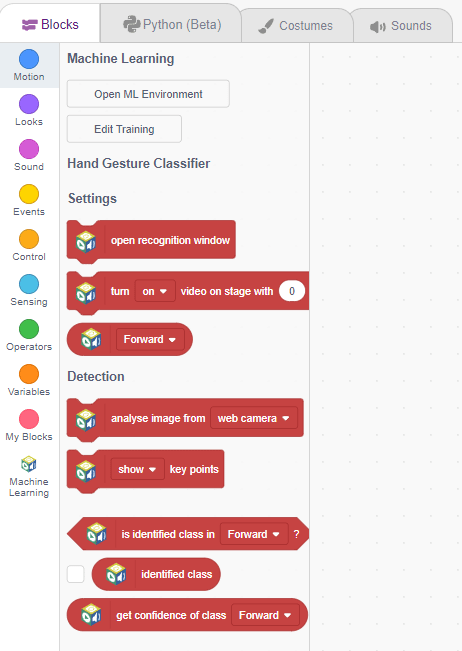

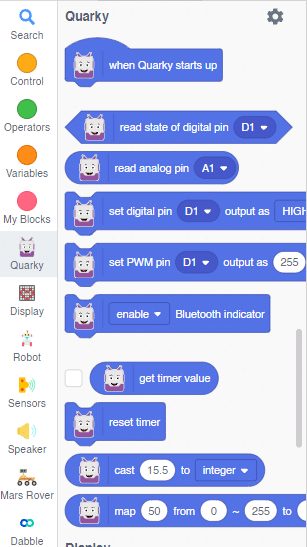

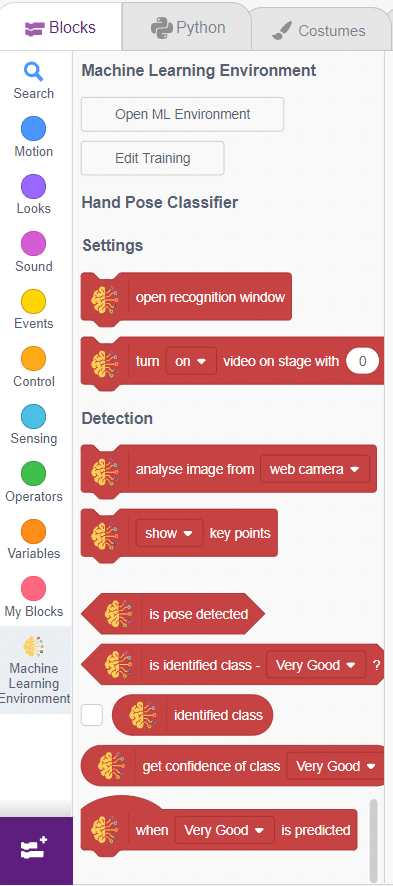

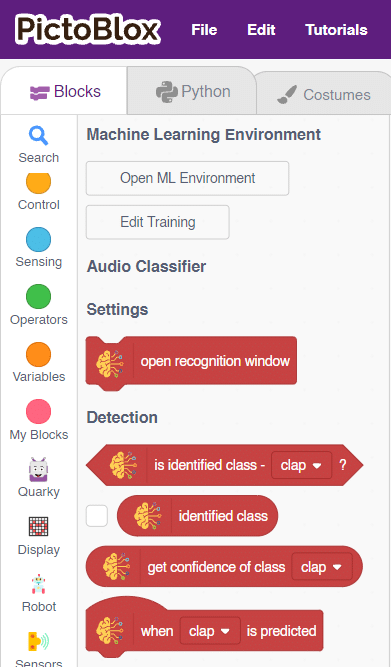

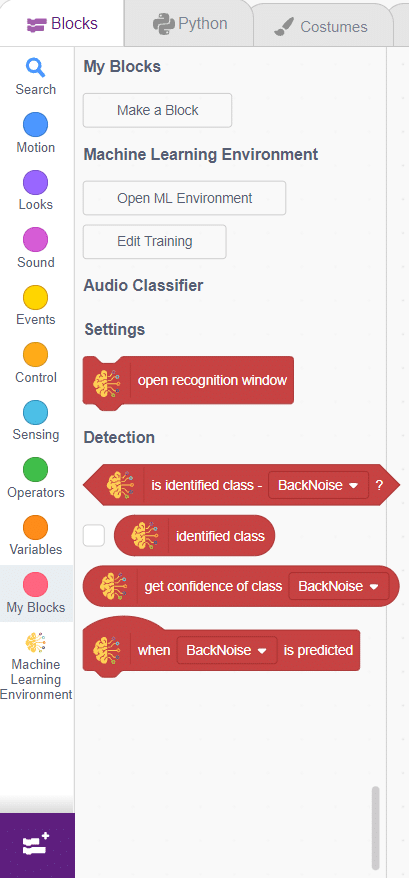

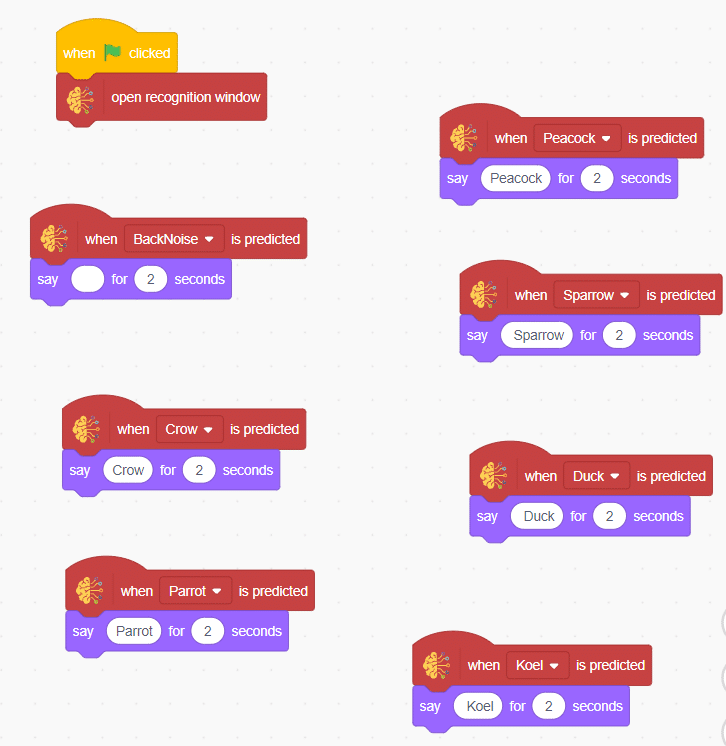

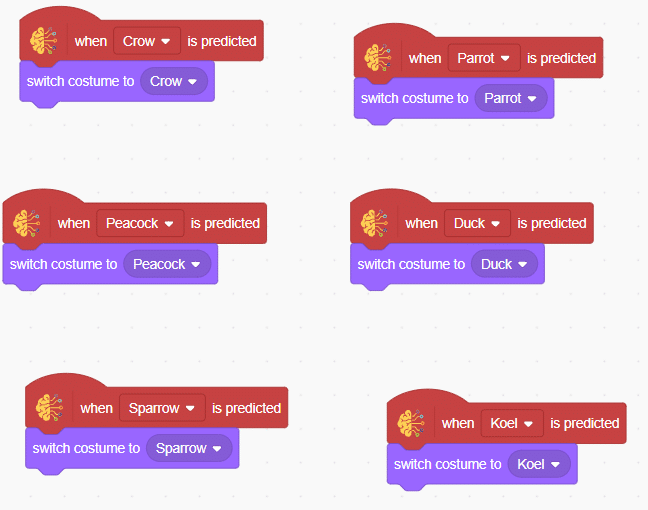

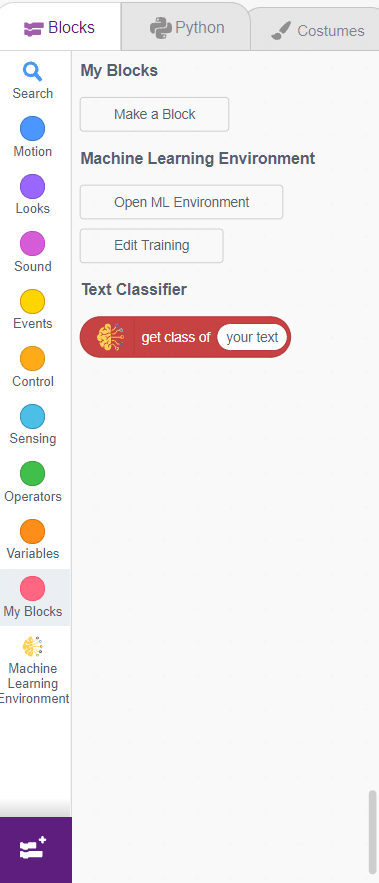

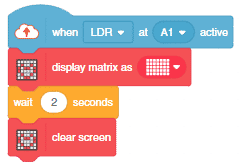

Export in Block Coding

Click the “Export Model” button at the top right of the Testing box. If you opened the ML Environment from Block Coding, PictoBlox will load your model into the Block Coding Environment.

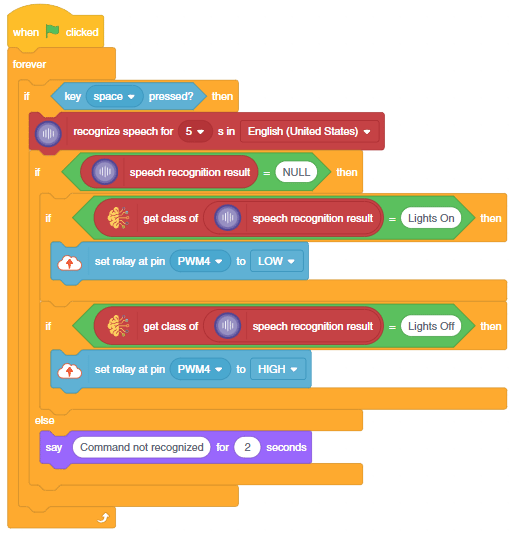

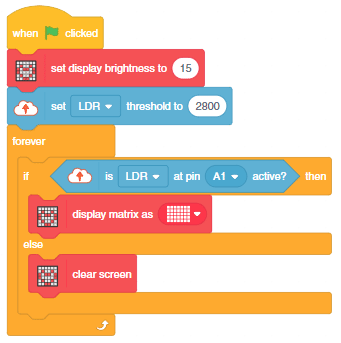

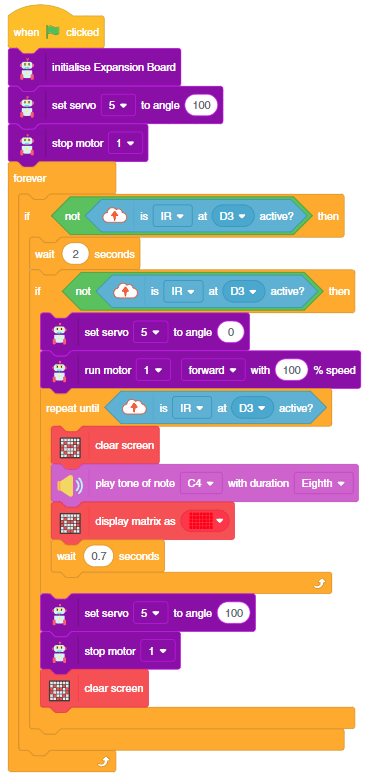

Logic

The Mars Rover will move according to the following logic:

- When the forward gesture is detected – the Mars Rover will move forward.

- When the backward gesture is detected – the Mars Rover will move backward.

- When the left gesture is detected – the Mars Rover will turn left.

- When the right gesture is detected – the Mars Rover will turn right.
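One way to express this logic is a lookup table from class name to action. The class names are assumptions: they must match whatever you named your classes. The hypothetical `action_for` helper normalizes case and surrounding spaces, so “Left ” and “left” map the same way.

```python
from typing import Optional

# Hypothetical class names; they must match the names of your classes in the ML Environment.
GESTURE_ACTIONS = {
    "forward": "move forward",
    "backward": "move backward",
    "left": "turn left",
    "right": "turn right",
}


def action_for(gesture: str) -> Optional[str]:
    """Look up the rover action for a recognized gesture; None means no rule matches."""
    return GESTURE_ACTIONS.get(gesture.strip().lower())


print(action_for("Left "))  # turn left
print(action_for("wave"))   # None
```

With a table, supporting a new gesture is a one-line change rather than another branch of conditions.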

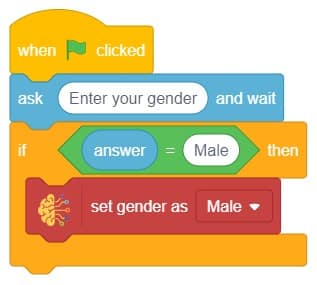

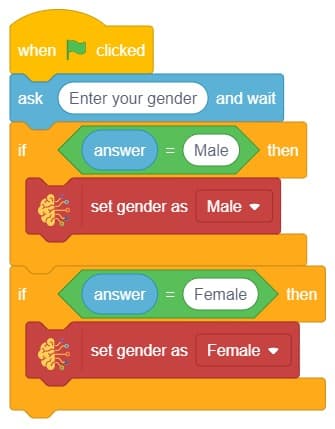

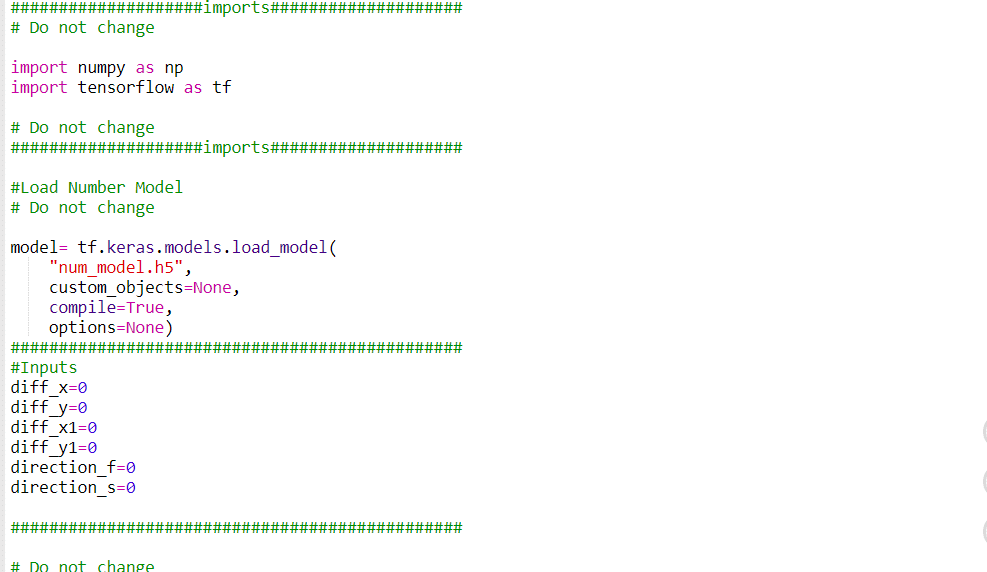

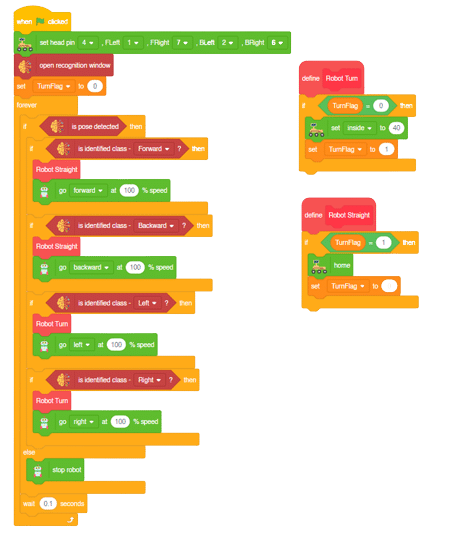

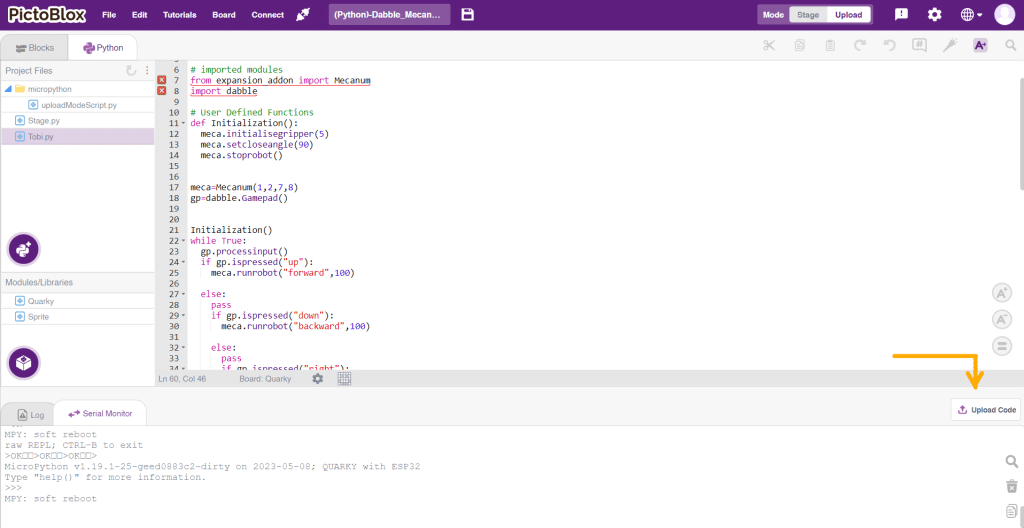

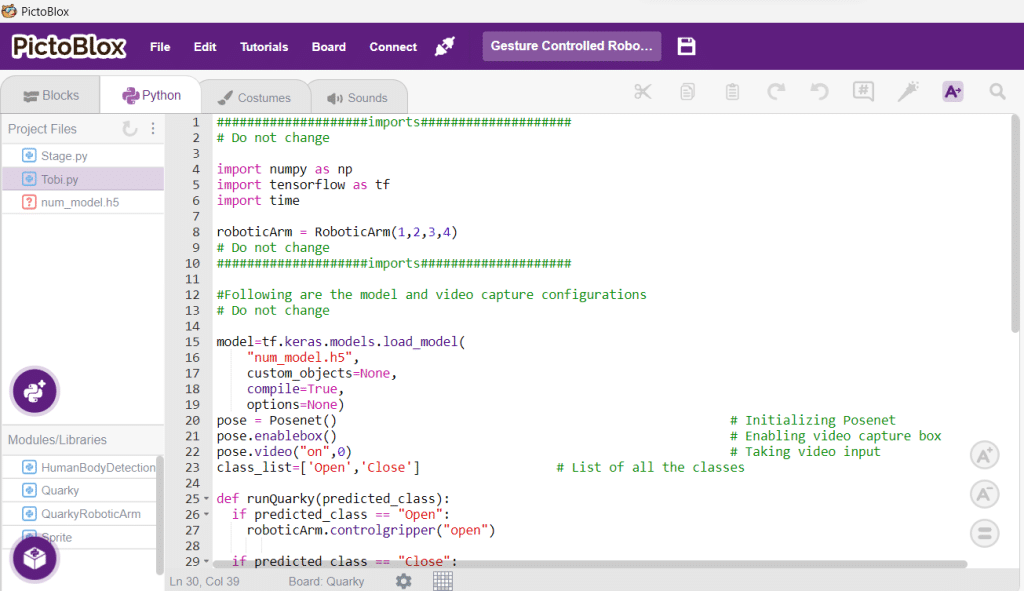

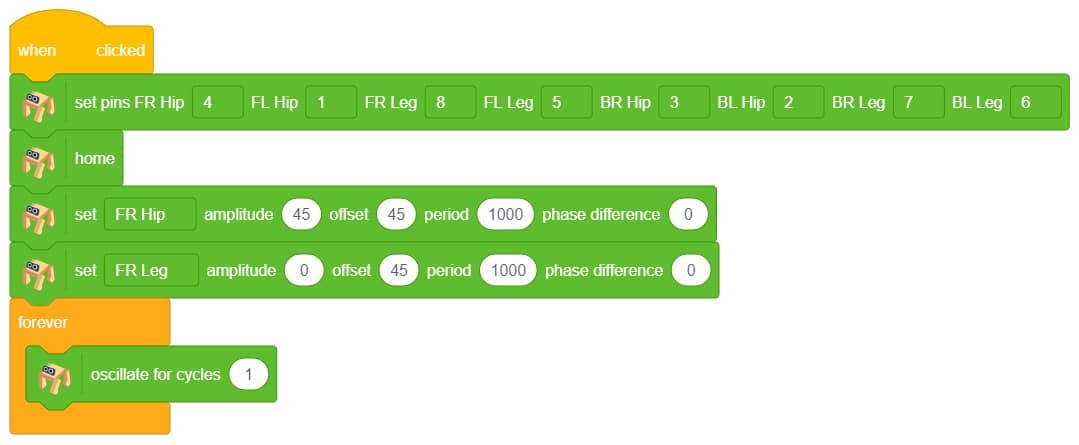

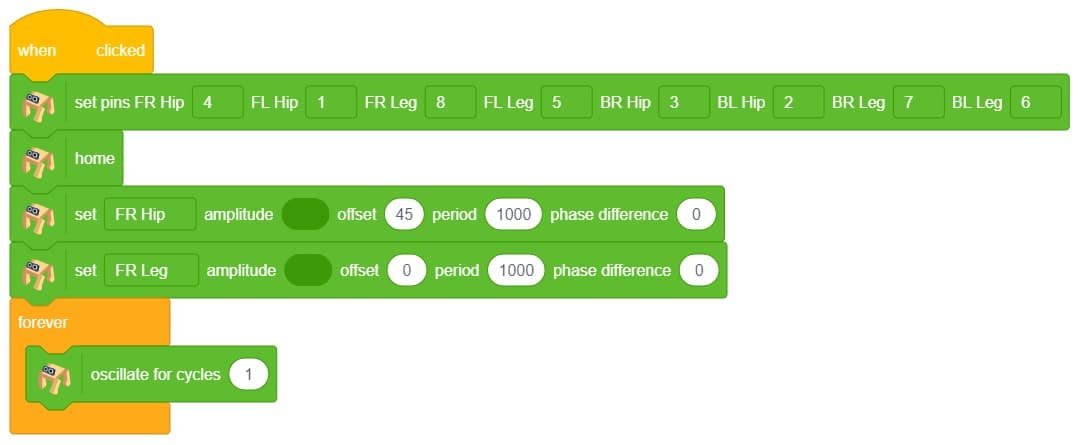

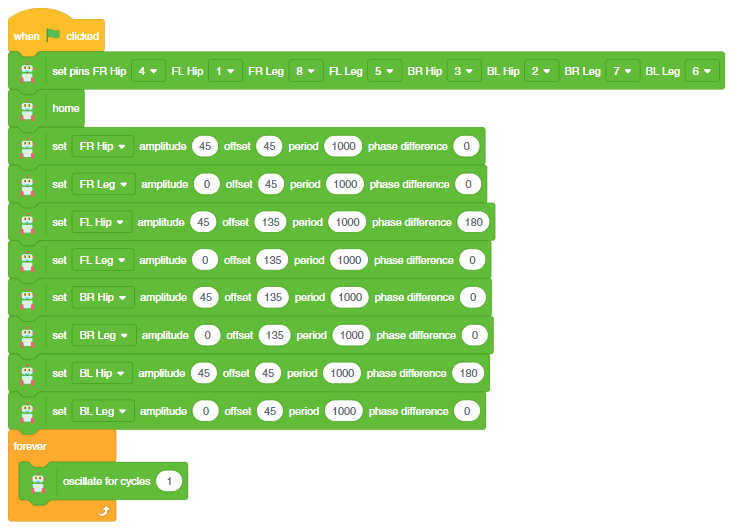

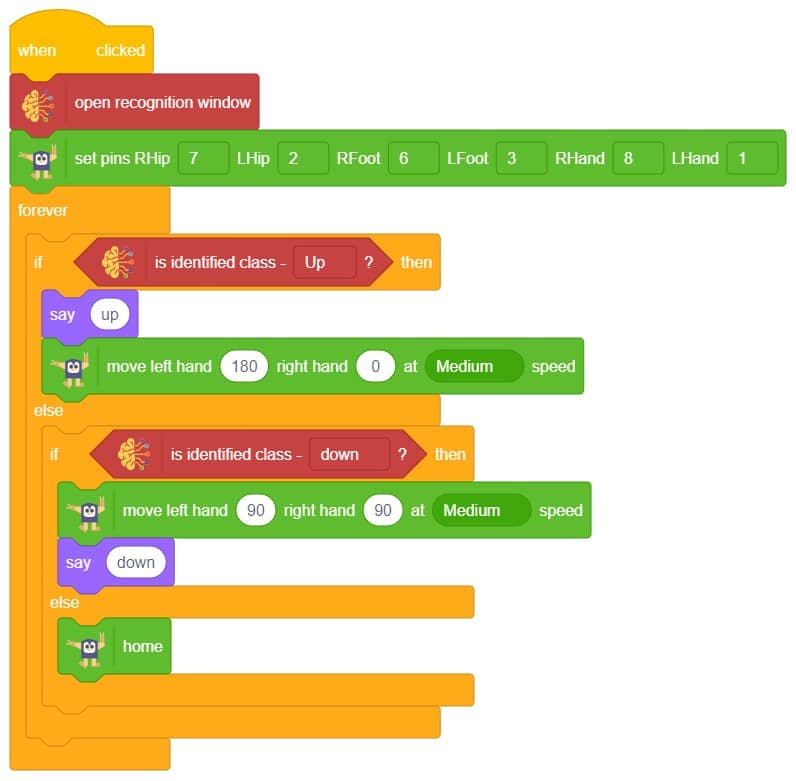

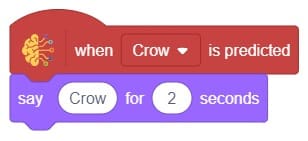

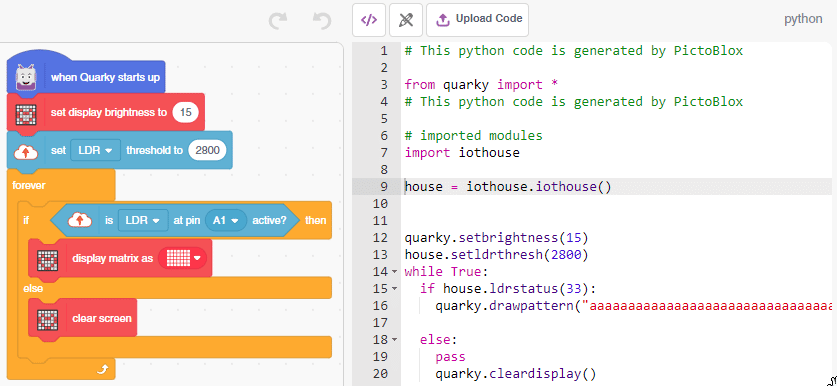

Code

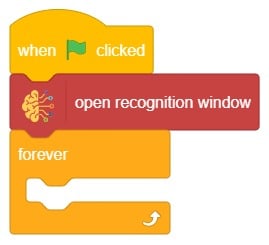

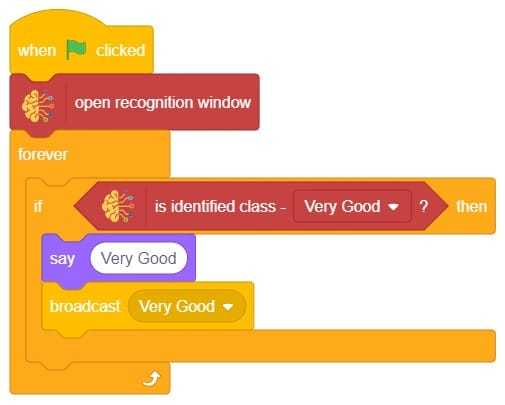

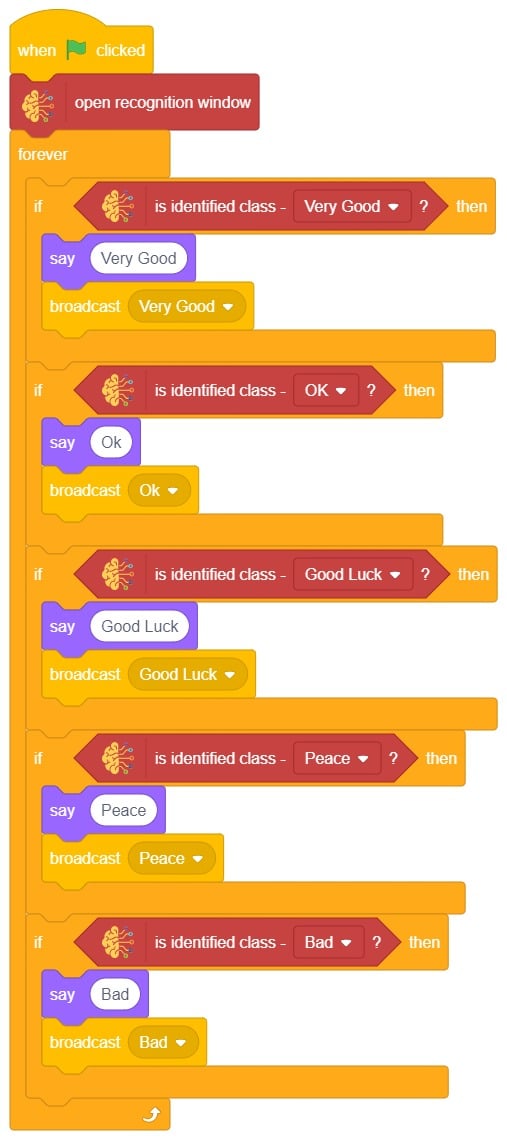

Logic

- First, we will initialize the different gesture classes.

- Then, we will turn on the camera with a certain level of transparency and open the recognition window, which identifies the different hand gestures in images from the stage.

- If the identified class from the analyzed image is “forward,” the Mars Rover will move forward at a specific speed.

- If the identified class is “backward,” the Mars Rover will move backward.

- If the identified class is “left,” the Mars Rover will turn left.

- If the identified class is “right,” the Mars Rover will turn right.

- Otherwise, the Mars Rover will be in the home position.
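The control flow in these steps can be sketched in Python with a stand-in rover. `FakeRover`, `control_loop`, and the speed value of 50 are all hypothetical. The sketch records commands instead of driving motors, and a plain list of recognized classes replaces the live camera feed. Frames with no recognized gesture (`None`, or an unknown name) send the rover to its home position.

```python
from typing import Iterable, List, Optional

SPEED = 50  # hypothetical speed value; use whatever your rover block expects


class FakeRover:
    """Stand-in for the Mars Rover that records commands instead of driving motors."""

    def __init__(self) -> None:
        self.commands: List[str] = []

    def move(self, direction: str, speed: int) -> None:
        self.commands.append(f"{direction}@{speed}")

    def home(self) -> None:
        self.commands.append("home")


def control_loop(recognized: Iterable[Optional[str]], rover: FakeRover, speed: int = SPEED) -> None:
    """Send one command per recognized frame, following the if/else chain of the logic steps."""
    for gesture in recognized:  # a real project would loop forever over camera frames
        if gesture == "forward":
            rover.move("forward", speed)
        elif gesture == "backward":
            rover.move("backward", speed)
        elif gesture == "left":
            rover.move("left", speed)
        elif gesture == "right":
            rover.move("right", speed)
        else:
            # No known gesture (or nothing detected): return to the home position.
            rover.home()


rover = FakeRover()
control_loop(["forward", None, "left", "wave"], rover)
print(rover.commands)  # ['forward@50', 'home', 'left@50', 'home']
```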

Output

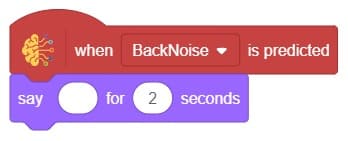

Note: You can add even more classes with different hand gestures to customize your control. This is just a small example from which you can build your own gesture-controlled Mars Rover in a few easy steps.