This block sets the user's ChatGPT API key for the project.

How to get the API Key?

Follow the steps below to get your own API key.

- Go to the OpenAI website: https://platform.openai.com/overview

- Create an OpenAI account:

- Click on Signup.

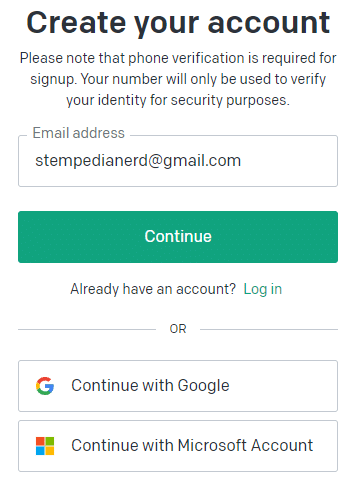

- Enter your email ID and click on Continue.

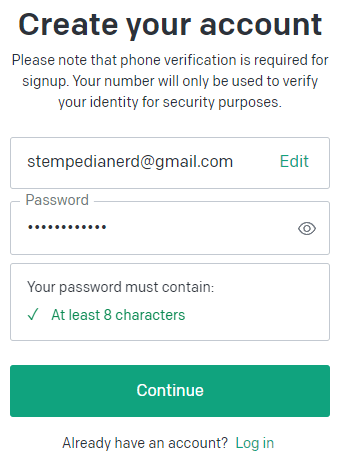

- Enter the password and click on Continue.

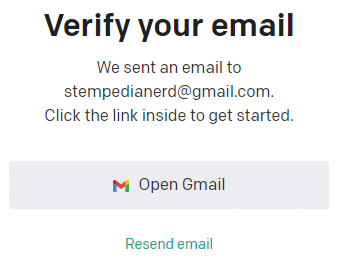

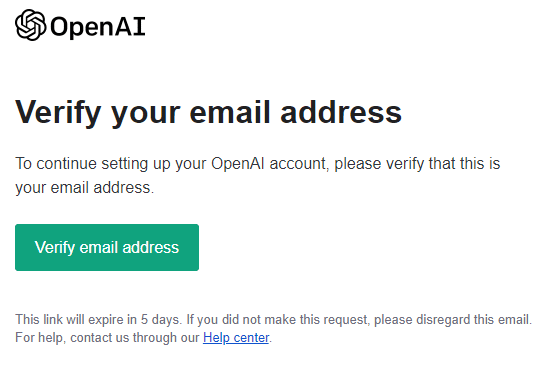

- Verify your email ID.

- Go to your email inbox and open the email from OpenAI. Click on Verify Email Address.

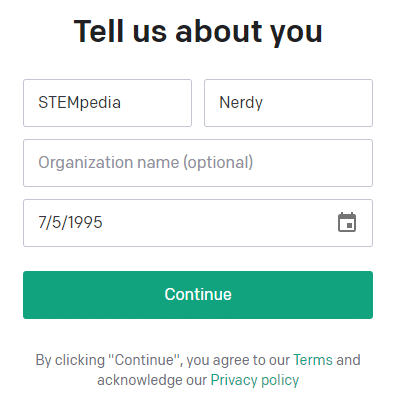

- Next, enter your details. Fill in the form and click on Continue.

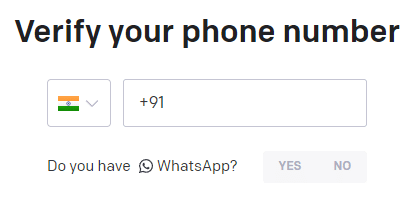

- Next, verify your phone number.

- Once verified, you will be automatically logged in to the website.

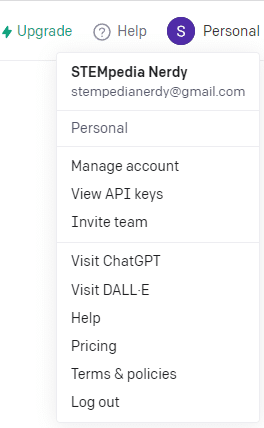

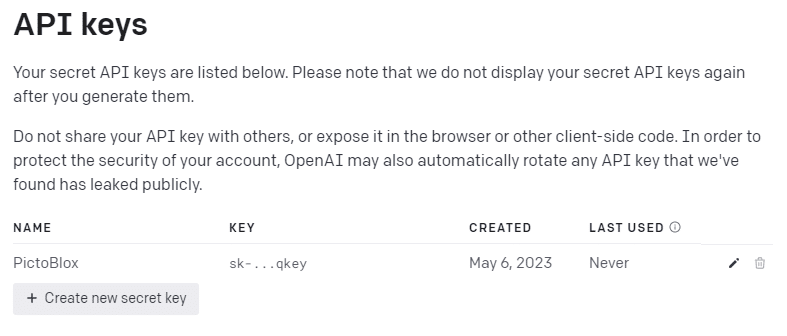

- Click on the Personal tab in the top-right corner and select View API keys.

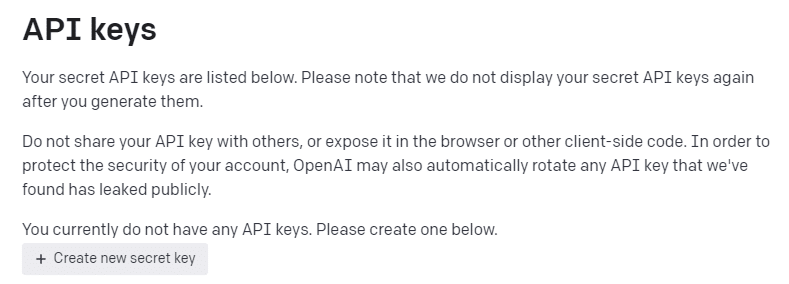

- All of your API keys are listed here. Click on the Create new secret key button.

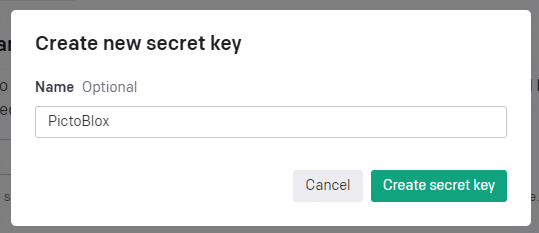

- Enter a name of your choice for the secret key and click on Create secret key.

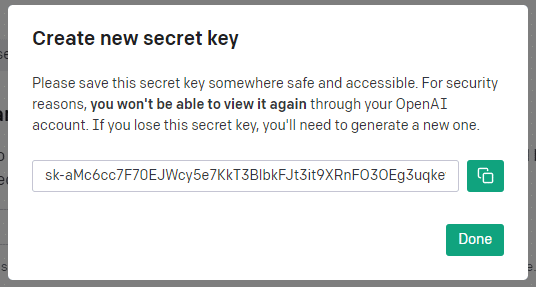

- The key will be generated. Copy it and save it in a text file on your computer for future use. Click on Done.

- You can see the API key generated on the page.

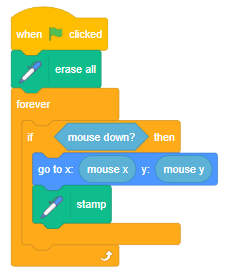

- Next, in PictoBlox, add the API key to the set ChatGPT API to () block. This sets the API key for the project.
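
If you saved the key in a text file as described above, a small helper can read it back when you need to paste it into the block. The following is only a minimal Python sketch, not part of PictoBlox: the function names `load_api_key` and `mask_key` are hypothetical, and it assumes the file contains nothing but the key.

```python
from pathlib import Path


def load_api_key(path):
    """Read an API key from a text file, ignoring surrounding whitespace."""
    key = Path(path).read_text(encoding="utf-8").strip()
    if not key:
        raise ValueError(f"No API key found in {path}")
    return key


def mask_key(key, visible=4):
    """Hide all but the last few characters so the key is safe to display."""
    if len(key) <= visible:
        return "*" * len(key)
    return "*" * (len(key) - visible) + key[-visible:]
```

For example, `mask_key(load_api_key("openai_key.txt"))` would show only the last four characters of the key stored in a file named `openai_key.txt` (an assumed file name). Masking is a reasonable habit because anyone who sees the full key can use it on your account.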