Object Detection (ML)

- Available in: Block Coding, Python Coding

- Mode: Stage Mode

- WiFi Required: No

- Compatible Hardware in Block Coding: evive, Quarky, Arduino Uno, Arduino Mega, Arduino Nano, ESP32, T-Watch, Boffin, micro:bit, TECbits, LEGO EV3, LEGO Boost, LEGO WeDo 2.0, Go DFA, None

- Compatible Hardware in Python: Quarky, None

- Object Declaration in Python: Not Applicable

- Extension Category: ML Environment

Introduction

The Object Detection extension of the PictoBlox Machine Learning Environment is used to detect particular targets present in a given picture.

Tutorial on using Object Detection (ML) in Block Coding

Tutorial on using Object Detection (ML) in Python Coding

Image Classification vs Object Detection

Image classification and object detection are often confused. Let’s look at how they differ.

Image Classification deals with categorizing images based on their characteristics. These characteristics are observed and extracted from the images by the training model. Once extracted, these characteristics can be used as a set of rules to classify previously unseen data. Let’s understand this with an example.

Observe these images:

Image 1:

Image 2:

Thanks to the human brain, it’s no big feat for us to make out that Image 1 is that of a cat and Image 2 is that of a dog. However, computers do not possess the intelligence we do, hence we need to train them to recognize such images. The same is true for any scenario where we want the computer to categorize data.

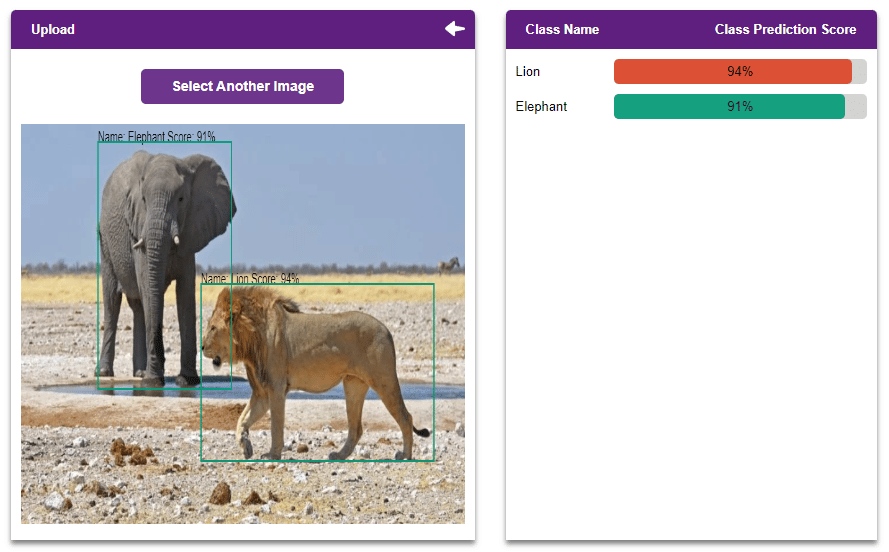

Now observe this image:

Again, our human brain doesn’t break a sweat in making out that there are two animals in the picture, one cat, and one dog. However, a computer trained to recognize cats and dogs separately will not be able to make complete sense of the image. This is where object detection comes into play.

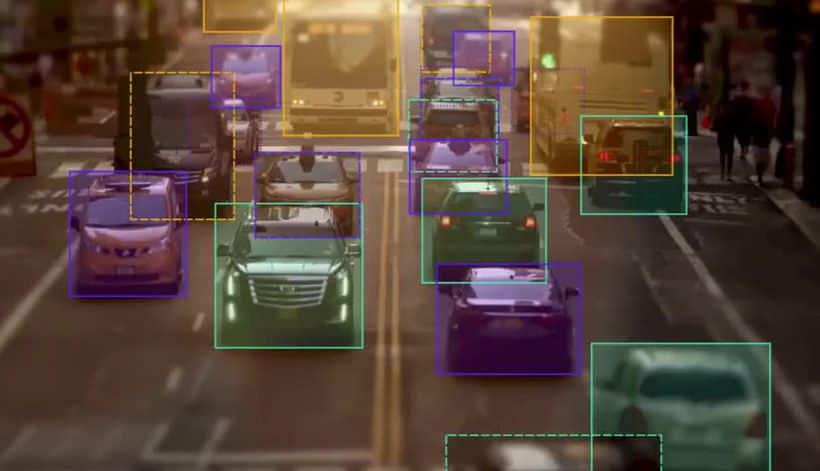

The biggest characteristic of Object Detection is the fact that it takes the location of objects into consideration. Object Detection algorithms can detect not only the class of the target objects but also their location.

Hence, Object Detection is extremely useful when there are multiple objects present in an image.
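To make the difference concrete, the sketch below contrasts the shape of the two outputs for the cat-and-dog picture. The `Detection` type, the coordinate values, and the `labels_in` helper are hypothetical and are not part of PictoBlox; they only illustrate that a classifier yields a single label while a detector yields a label plus a location for each object.

```python
from typing import List, NamedTuple, Tuple


class Detection(NamedTuple):
    """One detected object: its class and where it is (illustrative type)."""
    label: str
    box: Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax), normalized 0..1


# An image classifier returns one label for the whole picture.
classification_result = "dog"  # made-up output

# An object detector returns one entry per object, each with a location.
detection_result: List[Detection] = [
    Detection("cat", (0.05, 0.30, 0.45, 0.95)),  # made-up coordinates
    Detection("dog", (0.50, 0.10, 0.98, 0.95)),
]


def labels_in(detections: List[Detection]) -> List[str]:
    """Return the distinct class names found in an image, sorted."""
    return sorted({d.label for d in detections})


print(labels_in(detection_result))
```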

Opening Object Detection Workflow

Follow the steps below:

- Open PictoBlox and create a new file.

- Select the appropriate Coding Environment.

- Select the “Open ML Environment” option under the “Files” tab to access the ML Environment.

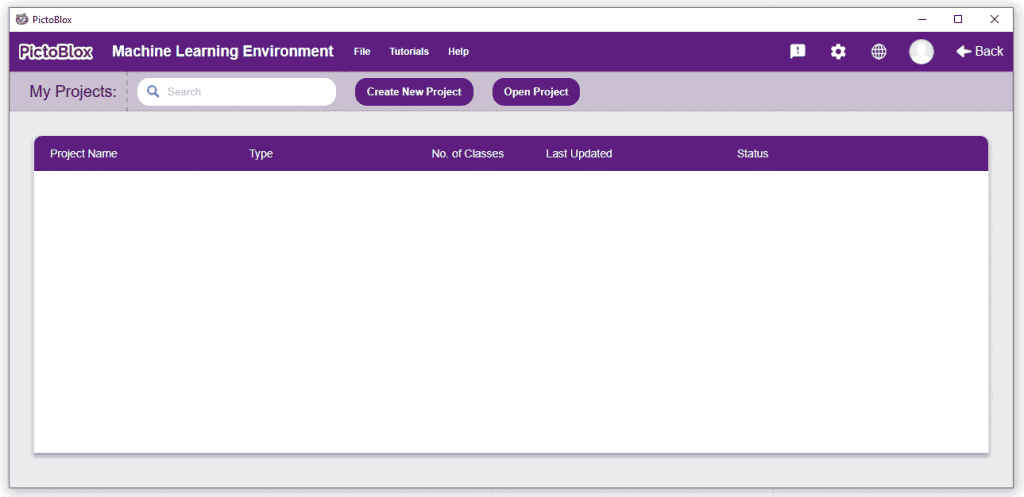

- You’ll be greeted with the following screen.

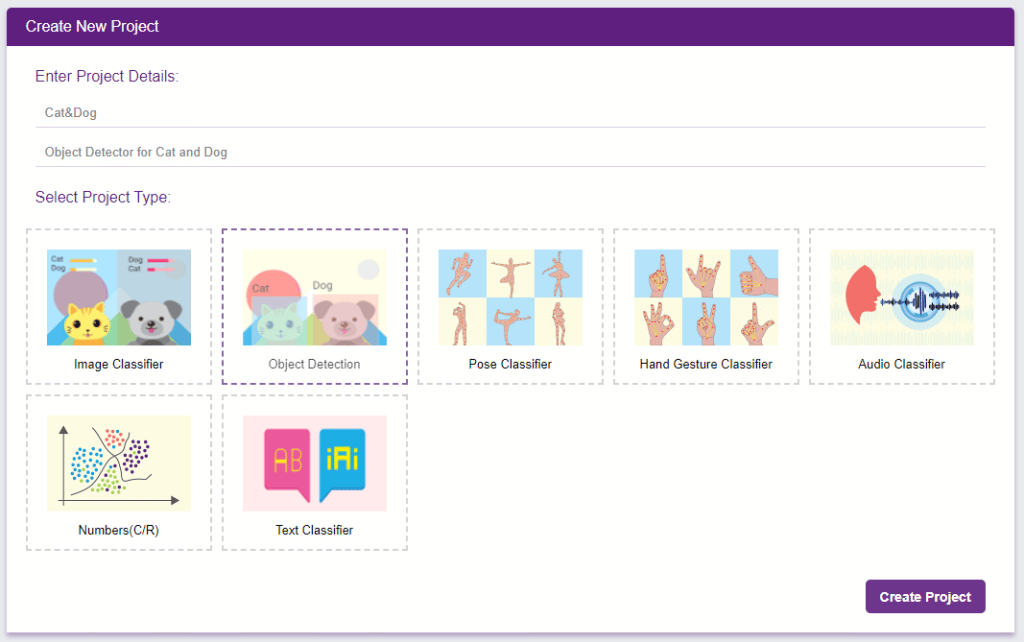

- Click on “Create New Project”.

- A window will open. Type in a project name of your choice and select the “Object Detection” extension. Click the “Create Project” button to open the Object Detection window.

- You shall see the Object Detection workflow with two classes already made for you. Your environment is all set. Now it’s time to upload the data.

Adding Data to Project

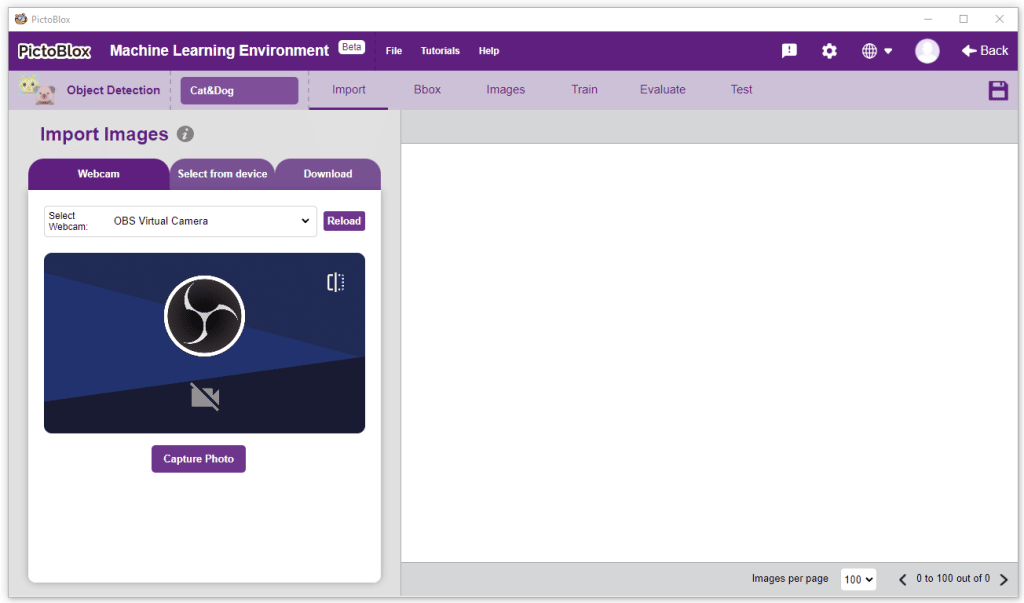

There are 3 ways to add the images to the project:

- Webcam: You can take photos from the camera directly using this option.

- File Upload: You can upload the images from the local file system.

- Downloading from PictoBlox Database: This gives you the option of downloading pre-annotated images and annotating images captured manually by the user.

The images imported from the Database are already labeled for training.


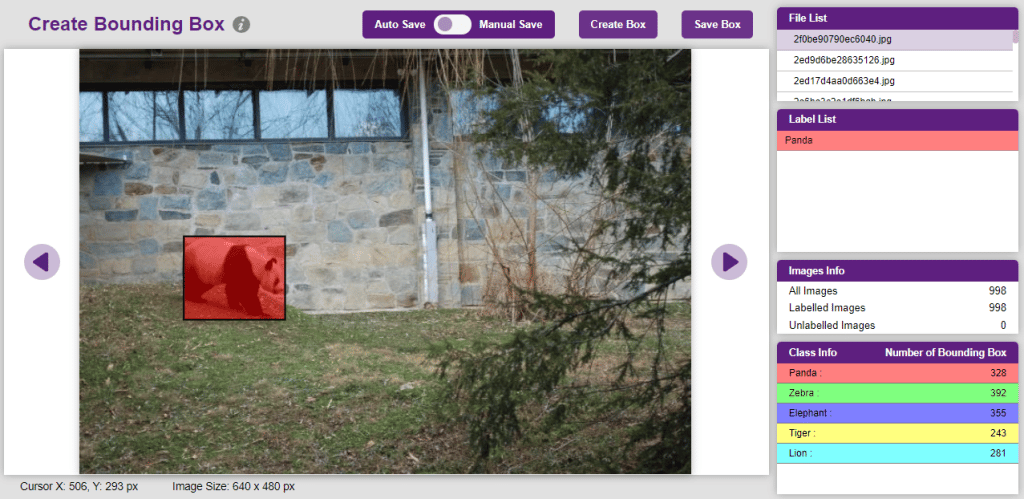

Bounding Box – Labelling Images

A bounding box is an imaginary rectangle that serves as a point of reference for object detection and creates a collision box for that object.

We draw these rectangles over images, outlining the object of interest within each image by defining its X and Y coordinates. This makes it easier for machine learning algorithms to find what they’re looking for and determine collision paths, and it conserves valuable computing resources.

Object detection has two components: object classification and object localization. In other words, to detect an object in an image, the computer needs to know what it is and where it is.
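As a rough illustration of these two components, an annotation can be thought of as a class name (what the object is) plus the corners of a rectangle (where it is). The `BoundingBox` class and the pixel values below are assumptions made for this sketch; they are not the format PictoBlox uses internally.

```python
from dataclasses import dataclass


@dataclass
class BoundingBox:
    """A labelled rectangle in pixel coordinates (illustrative only)."""
    label: str  # classification: what the object is
    xmin: int   # localization: left edge
    ymin: int   # localization: top edge
    xmax: int   # localization: right edge
    ymax: int   # localization: bottom edge

    def area(self) -> int:
        """Area of the rectangle in square pixels."""
        return (self.xmax - self.xmin) * (self.ymax - self.ymin)

    def contains(self, x: int, y: int) -> bool:
        """True if the point (x, y) lies inside or on the rectangle."""
        return self.xmin <= x <= self.xmax and self.ymin <= y <= self.ymax


# A made-up annotation: a car occupying a 200 x 120 pixel region.
car = BoundingBox("car", xmin=40, ymin=60, xmax=240, ymax=180)
print(car.label, car.area())
```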

Take self-driving cars as an example. An annotator will draw bounding boxes around other vehicles and label them. This helps train an algorithm to understand what vehicles look like. Annotating objects such as vehicles, traffic signals, and pedestrians makes it possible for autonomous vehicles to maneuver busy streets safely. Self-driving car perception models rely heavily on bounding boxes to make this possible.

Bounding boxes are used in all of these areas to train algorithms to identify patterns.

To create a bounding box in an image, click on the “Create Box” button and draw the box around the object. After the box is drawn, go to the “Label List” column, click on the edit button, and type in a name for the object under the bounding box. This name will become a class. Once you’ve entered the name, click on the tick mark to label the object.

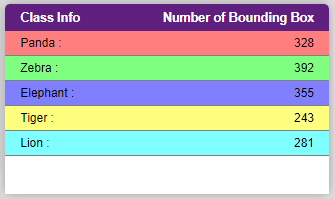

Once you’ve labeled an object, its count is updated in the “Class Info” column. You can simply click on the class to classify another object under that label.

Options in Bounding Box:

- Auto Save: This option automatically saves the bounding boxes and their labels as they are created. When this option is enabled, you do not need to save each image manually.

- Manual Save: This option disables the auto-saving of the bounding boxes. When this option is enabled you have to save the image before moving on to the next image for labeling.

- Create Box: This option starts the cursor on the images to create the bounding box. When the box is created, you can label it in the Label List.

- Save Box: This option saves all the bounding boxes created under the Label List.

- File List: It shows the list of images available for labeling in the project.

- Label List: It shows the list of Labels created for the selected image.

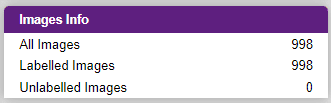

- Image Info: It shows the summary of the images – labeled and unlabelled images.

- Class Info: It shows the summary of the classes with the total number of bounding boxes created for each class.

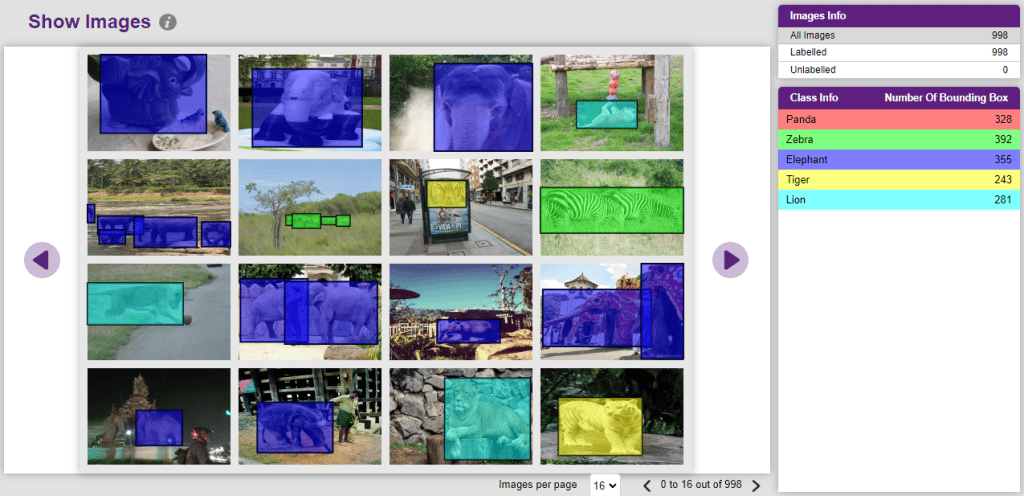

Analyzing Images

It is important that we analyze how the images are labeled. The Images tab allows you to analyze the images.

You can edit the images by clicking directly on the images.

Training the Model

In Object Detection, the model must locate and identify all the targets in the given image. This makes Object Detection a complex task to execute. Hence, the hyperparameters work differently in the Object Detection Extension.

Follow the process:

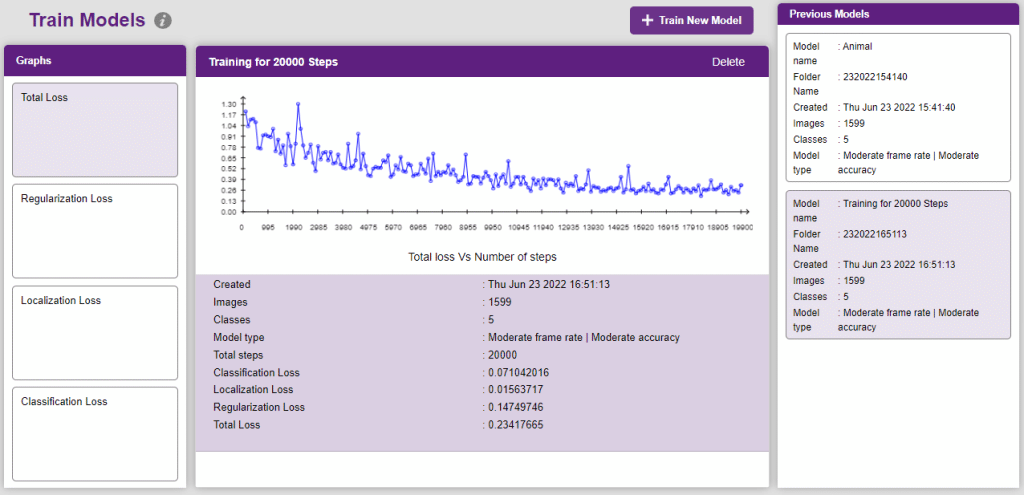

- Go to the “Train” tab. You should see the following screen:

- Click on the “Train New Model” button. Select the classes that need to be trained, and click on “Generate Dataset”. Once the dataset is generated, click “Next”.

- You shall see the training configurations. Observe the hyperparameters.

- Model name – The name of the model.

- Batch size – The number of training samples utilized in one iteration. The larger the batch size, the larger the RAM required.

- Number of iterations – The number of times your model will iterate through a batch of images.

- Number of layers – The number of layers in your model. Use more layers for large models.

Note: Hover your mouse over the question mark next to the hyperparameters to see their description.


- Specify your hyperparameters. If the numbers go out of range, PictoBlox will show a message. Click “Create”.

- Click “Start Training”. If the desired performance is reached, click on “Stop”.

Note: Training an Object Detection model is a time-consuming task. It might take a couple of hours to complete training.

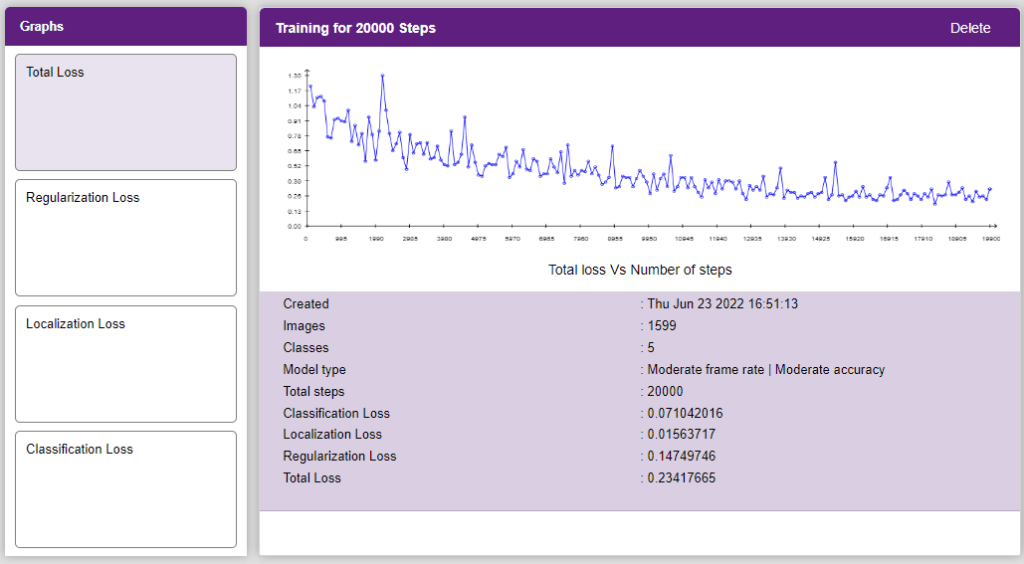

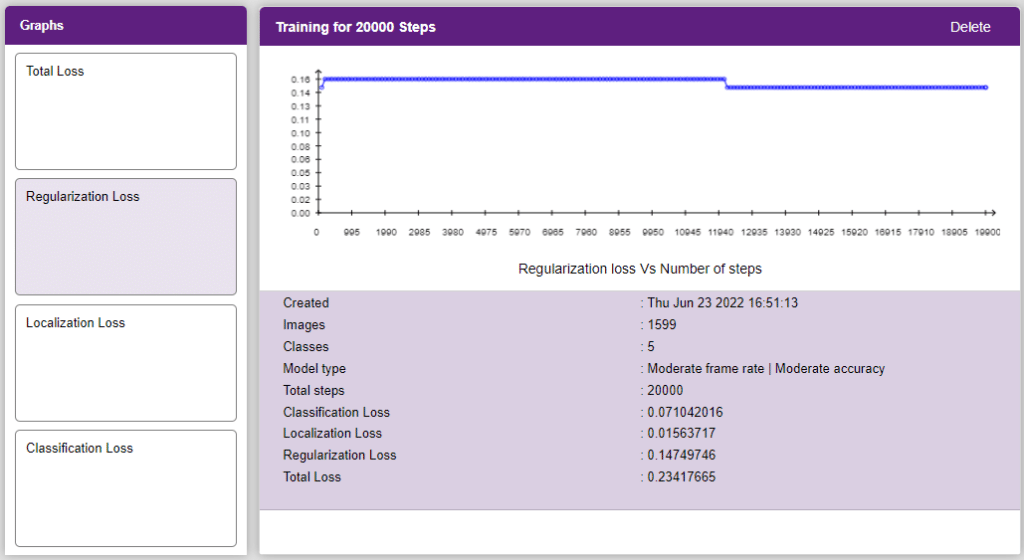

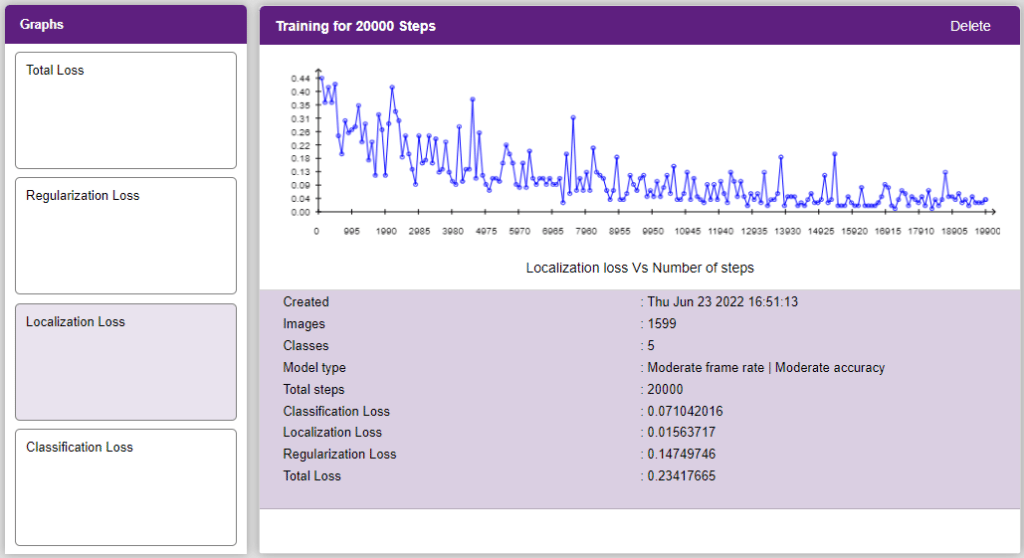

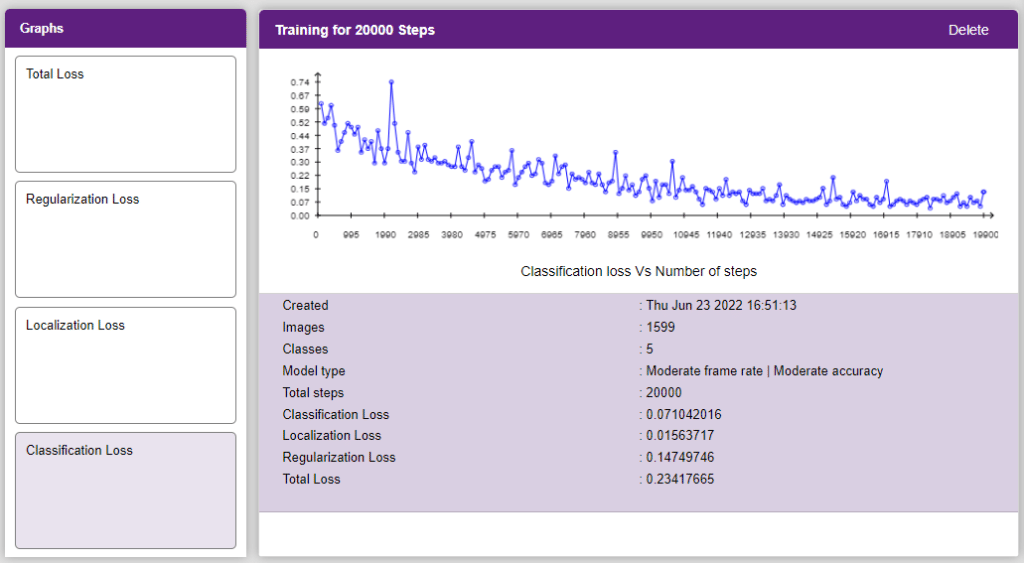

- After the training is completed, you’ll see four loss graphs under the “Graphs” panel. Click on the buttons to view each graph.

- Total Loss

- Regularization Loss

- Localization Loss

- Classification Loss

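The batch size and number of iterations described in the training configuration interact: together they determine how many images the model processes during training. The sketch below works through that arithmetic. The function names and the example numbers are assumptions chosen for illustration and are not PictoBlox defaults.

```python
def images_processed(batch_size: int, iterations: int) -> int:
    """Total training samples seen: one batch per iteration."""
    return batch_size * iterations


def dataset_passes(batch_size: int, iterations: int, dataset_size: int) -> float:
    """Approximate number of times the whole dataset is seen during training."""
    if dataset_size <= 0:
        raise ValueError("dataset_size must be positive")
    return images_processed(batch_size, iterations) / dataset_size


# Example: 200 labelled images, batch size 8, 500 iterations.
print(images_processed(batch_size=8, iterations=500))                   # 4000
print(dataset_passes(batch_size=8, iterations=500, dataset_size=200))  # 20.0
```

A larger batch size covers the dataset in fewer iterations but, as noted above, needs more RAM.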

Evaluating the Model

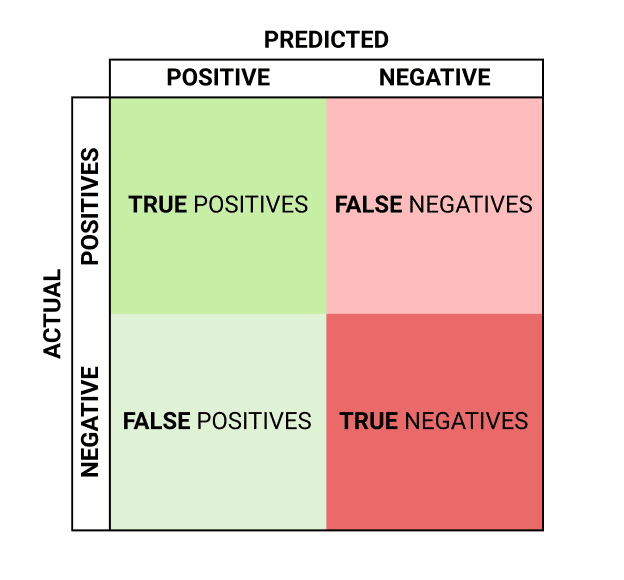

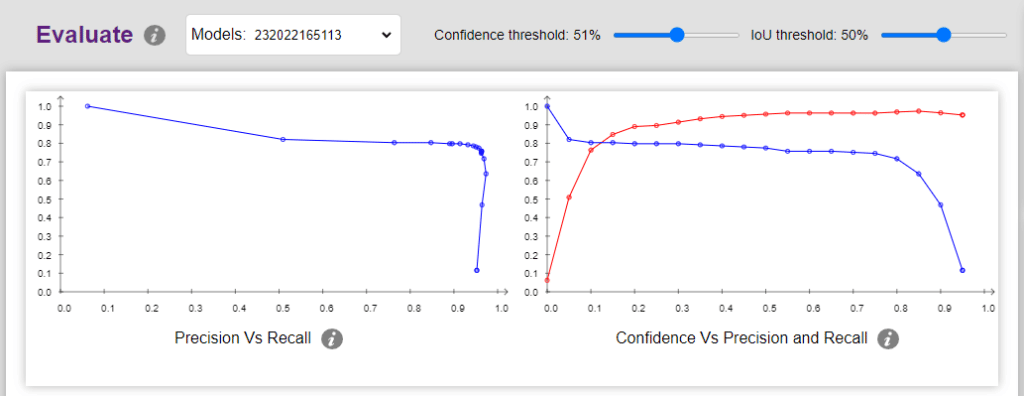

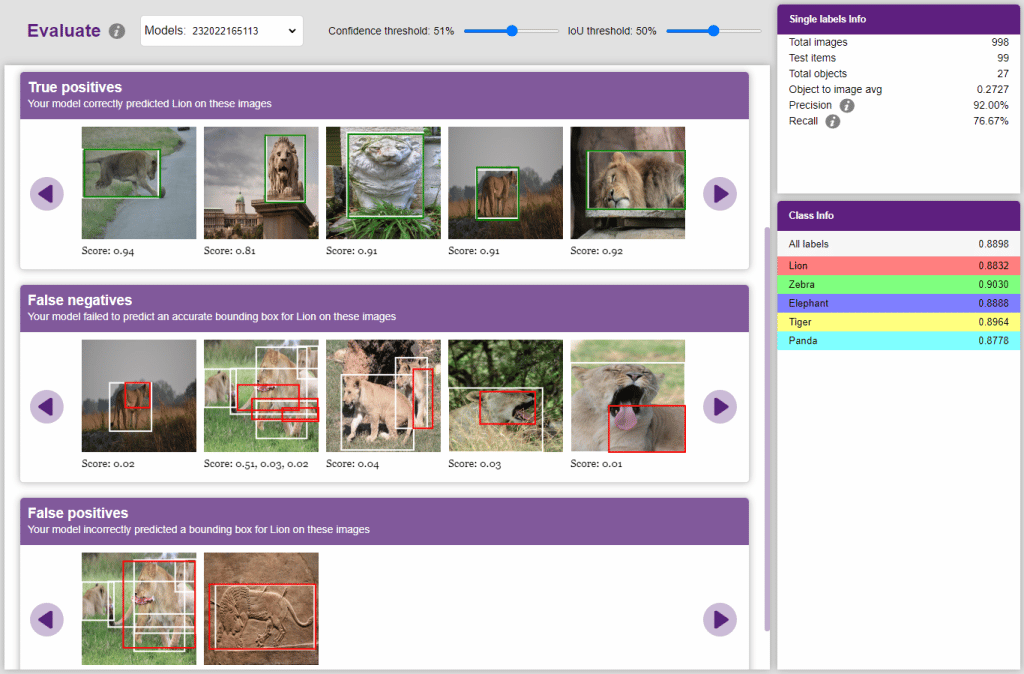

Now, let’s move to the “Evaluate” tab. You can view True Positives, False Negatives, and False Positives for each class here along with metrics like Precision and Recall.

- A true positive is an outcome where the model correctly predicts the positive class.

- A true negative is an outcome where the model correctly predicts the negative class.

- A false positive is an outcome where the model incorrectly predicts the positive class.

- A false negative is an outcome where the model incorrectly predicts the negative class.

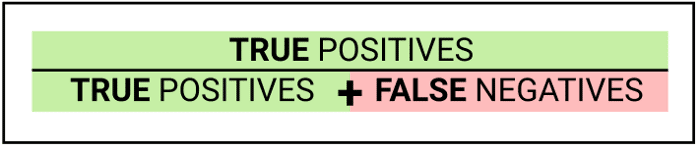

Precision and recall are two numbers that together are used to evaluate the performance of object detection.

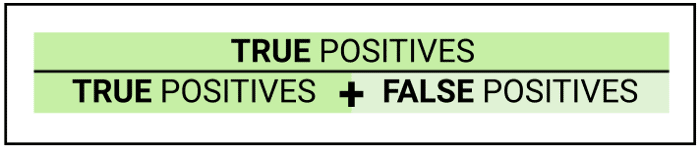

- Precision is calculated by dividing the true positives by everything that was predicted as positive (true positives plus false positives).

- Recall (or True Positive Rate) is calculated by dividing the true positives by everything that should have been predicted as positive (true positives plus false negatives).

A perfect model has precision and recall both equal to 1.
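The two definitions above can be sketched in a few lines. The counts used here are made up, and returning 0.0 when a denominator is zero is a convention assumed for this sketch rather than something PictoBlox documents.

```python
def precision(tp: int, fp: int) -> float:
    """True positives divided by everything predicted as positive."""
    predicted_positive = tp + fp
    return tp / predicted_positive if predicted_positive else 0.0


def recall(tp: int, fn: int) -> float:
    """True positives divided by everything that should have been positive."""
    actual_positive = tp + fn
    return tp / actual_positive if actual_positive else 0.0


# Example: 8 correct detections, 2 spurious detections, 8 missed objects.
print(precision(tp=8, fp=2))  # 0.8
print(recall(tp=8, fn=8))     # 0.5
```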

You can visualize the Precision and Recall in the Evaluation graphs of the class or the whole model:

You can select the individual class and look at the performance of the class:

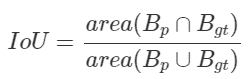

Confidence Threshold & IoU

- The confidence score is the probability that an anchor box contains an object. It is usually predicted by a classifier.

- Intersection over Union (IoU) is defined as the area of the intersection divided by the area of the union of a predicted bounding box and a ground-truth box:
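The IoU definition can be sketched as a small function. It assumes boxes are given as `(xmin, ymin, xmax, ymax)` tuples, which is a choice made for this example; the function name `iou` is likewise illustrative.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax)


def iou(box_a: Box, box_b: Box) -> float:
    """Area of intersection divided by area of union of two boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Width and height of the overlapping region (zero if the boxes are disjoint).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h

    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - intersection

    return intersection / union if union > 0 else 0.0


# Two 2x2 boxes overlapping in a 1x1 square: intersection 1, union 7.
print(iou((0, 0, 2, 2), (1, 1, 3, 3)))  # 1/7, roughly 0.143
```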

Both confidence score and IoU are used as the criteria that determine whether detection is a true positive or a false positive.

- A detection is considered a true positive (TP) only if it satisfies three conditions:

- confidence score > threshold;

- the predicted class matches the class of a ground truth;

- the predicted bounding box has an IoU greater than a threshold (e.g., 0.5) with the ground truth.

- A detection that violates either of the latter two conditions is a false positive (FP). If multiple predictions correspond to the same ground truth, only the one with the highest confidence score counts as a true positive; the rest are considered false positives.

- When the confidence score of a detection that is supposed to detect a ground truth is lower than the threshold, the detection counts as a false negative (FN).

- When the confidence score of a detection that is not supposed to detect anything is lower than the threshold, the detection counts as a true negative (TN).
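The rules above can be sketched as a simple matching routine for a single image. This is a simplified illustration, not the evaluation code PictoBlox runs: the function names, the tuple layouts, and the default thresholds of 0.5 are assumptions, and a false negative is counted here as any ground truth left without an accepted detection.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax)


def iou(a: Box, b: Box) -> float:
    """Area of intersection divided by area of union."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def count_outcomes(predictions: List[Tuple[str, float, Box]],
                   ground_truths: List[Tuple[str, Box]],
                   conf_threshold: float = 0.5,
                   iou_threshold: float = 0.5) -> Tuple[int, int, int]:
    """Return (TP, FP, FN) for one image."""
    # Keep confident predictions only, highest confidence first.
    kept = sorted((p for p in predictions if p[1] > conf_threshold),
                  key=lambda p: p[1], reverse=True)
    matched = set()  # indices of ground truths already claimed
    tp = fp = 0
    for label, _score, box in kept:
        best_idx, best_iou = None, iou_threshold
        for idx, (gt_label, gt_box) in enumerate(ground_truths):
            if idx in matched or gt_label != label:
                continue  # already claimed, or class mismatch
            overlap = iou(box, gt_box)
            if overlap > best_iou:
                best_idx, best_iou = idx, overlap
        if best_idx is None:
            fp += 1  # wrong class, poor overlap, or duplicate detection
        else:
            matched.add(best_idx)
            tp += 1
    fn = len(ground_truths) - len(matched)  # objects nobody detected
    return tp, fp, fn


truths = [("cat", (0, 0, 10, 10)), ("dog", (20, 20, 30, 30))]
preds = [
    ("cat", 0.9, (0, 0, 10, 10)),    # correct: true positive
    ("cat", 0.8, (1, 1, 10, 10)),    # duplicate of the same cat: false positive
    ("dog", 0.3, (20, 20, 30, 30)),  # below confidence threshold: dog is missed
]
print(count_outcomes(preds, truths))  # (1, 1, 1)
```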

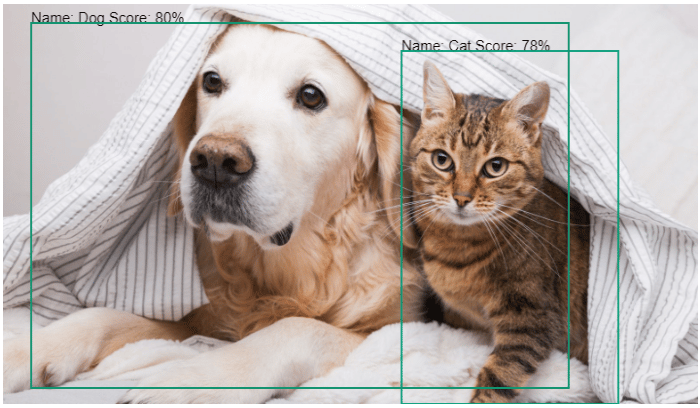

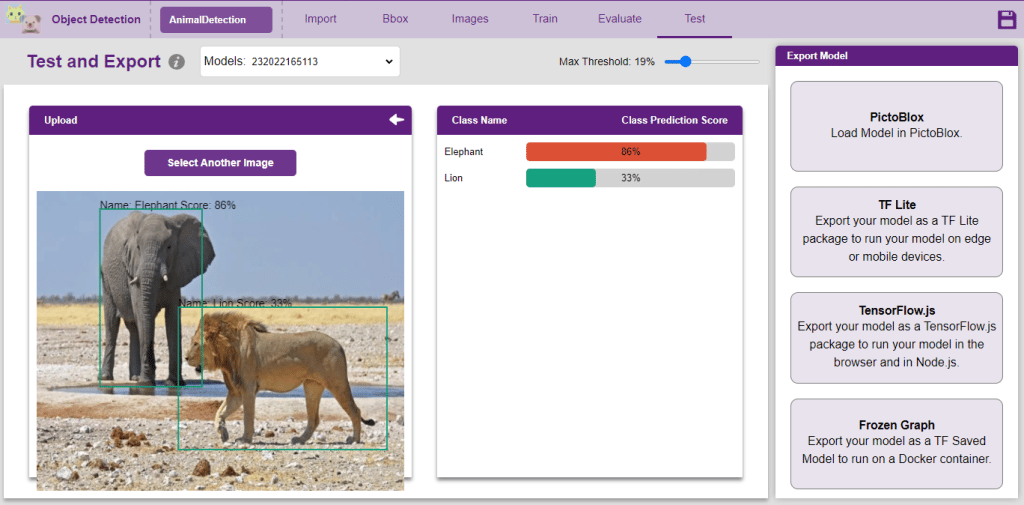

Testing the Model

The model can be tested with the following methods:

- Uploading Image:

- Webcam:

The model will return the probability of the input belonging to the classes.

The model can be exported in four different formats:

- PictoBlox: The model can be exported to the blocks and functions which we will see further in the next section.

- TF Lite: It exports the model into the TF Lite package. TensorFlow Lite provides a set of tools that enables on-device machine learning by allowing developers to run their trained models on mobile, embedded, and IoT devices and computers. It supports platforms such as embedded Linux, Android, iOS, and MCU.

- TensorFlow.js: It exports the model into the TensorFlow.js package to run in the browser and in Node.js architecture.

- Frozen Graph: It exports the model as the TF Saved Model to run in a Python environment. A SavedModel contains a complete TensorFlow program, including trained parameters and computation. It does not require the original model building code to run.

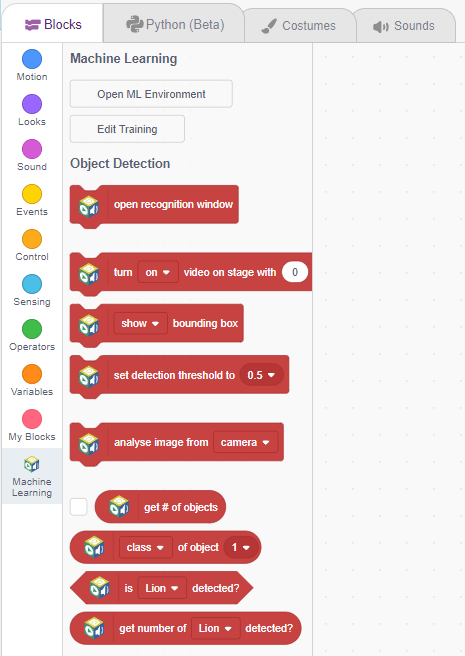

Export in Block Coding

Click on the “PictoBlox” button, and PictoBlox will load your model into the Block Coding Environment if you have opened the ML Environment in Block Coding.

Export in Python Coding

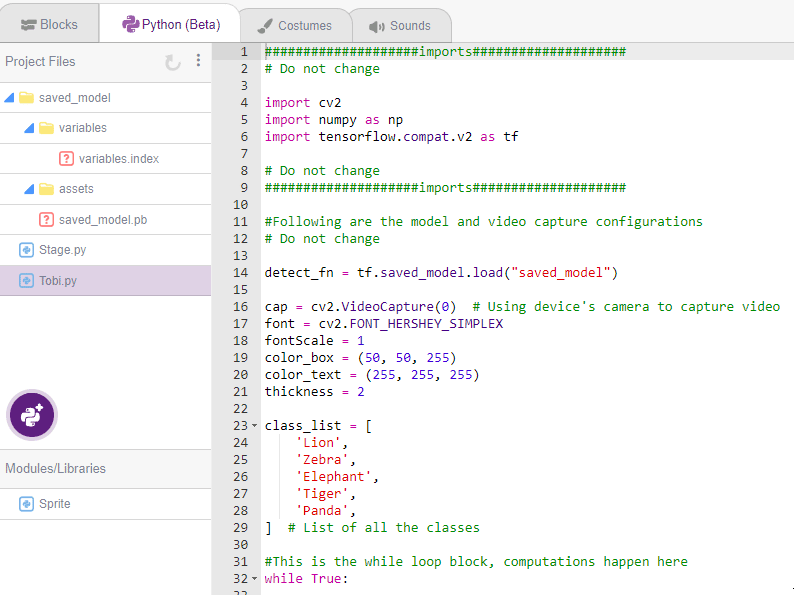

Click on the “PictoBlox” button, and PictoBlox will load your model into the Python Coding Environment if you have opened the ML Environment in Python Coding.

The following code appears in the Python Editor of the selected sprite.

```python
####################imports####################
# Do not change
import cv2
import numpy as np
import tensorflow.compat.v2 as tf
# Do not change
####################imports####################

# Following are the model and video capture configurations
# Do not change
detect_fn = tf.saved_model.load("saved_model")
cap = cv2.VideoCapture(0)  # Using device's camera to capture video
font = cv2.FONT_HERSHEY_SIMPLEX
fontScale = 1
color_box = (50, 50, 255)
color_text = (255, 255, 255)
thickness = 2

class_list = [
    'Lion',
    'Zebra',
    'Elephant',
    'Tiger',
    'Panda',
]  # List of all the classes

# This is the while loop block, computations happen here
while True:
    ret, image_np = cap.read()  # Read frame
    height, width, channels = image_np.shape  # Get height, width
    image_resized = cv2.resize(image_np,
                               (320, 320))  # Resize image to model input size
    input_tensor = tf.convert_to_tensor(image_resized)  # Convert image to tensor
    input_tensor = input_tensor[tf.newaxis,
                                ...]  # Expanding the tensor dimensions
    detections = detect_fn(input_tensor)  # Pass image to model
    num_detections = int(detections.pop('num_detections'))  # Postprocessing
    detections = {
        key: value[0, :num_detections].numpy()
        for key, value in detections.items()
    }
    detections['num_detections'] = num_detections
    detections['detection_classes'] = detections['detection_classes'].astype(
        np.int64)

    # Draw rectangle around detected object
    for j in range(len(detections['detection_boxes'])):
        # Set minimum threshold to 0.3
        if (detections['detection_scores'][j] > 0.3):
            # Starting and end point of detected object
            starting_point = (int(detections['detection_boxes'][j][1] * width),
                              int(detections['detection_boxes'][j][0] * height))
            end_point = (int(detections['detection_boxes'][j][3] * width),
                         int(detections['detection_boxes'][j][2] * height))
            # Class name of detected object
            className = class_list[detections['detection_classes'][j] - 1]
            # Starting point of text
            starting_point_text = (int(
                detections['detection_boxes'][j][1] *
                width), int(detections['detection_boxes'][j][0] * height) - 5)
            # Draw rectangle and put text
            image_np = cv2.rectangle(image_np, starting_point, end_point, color_box,
                                     thickness)
            image_np = cv2.putText(image_np, className, starting_point_text, font,
                                   fontScale, color_text, thickness, cv2.LINE_AA)

    # Show image in new window
    cv2.imshow("Detection Window", image_np)
    if cv2.waitKey(25) & 0xFF == ord(
            'q'):  # Press 'q' to close the classification window
        break

cap.release()  # Stops taking video input
cv2.destroyAllWindows()  # Closes input window
```
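Two details of the code above are easy to miss: each entry of `detection_boxes` is indexed as `[ymin, xmin, ymax, xmax]` with values scaled between 0 and 1, and class ids start at 1 while `class_list` indices start at 0. The sketch below isolates both steps in plain Python. The helper names `to_pixel_box` and `class_name` are hypothetical and do not appear in the generated code.

```python
from typing import List, Sequence, Tuple

Point = Tuple[int, int]


def to_pixel_box(box: Sequence[float], width: int, height: int) -> Tuple[Point, Point]:
    """Convert a normalized [ymin, xmin, ymax, xmax] box to pixel corner points.

    Returns ((x_start, y_start), (x_end, y_end)), the order cv2.rectangle expects.
    """
    ymin, xmin, ymax, xmax = box
    start = (int(xmin * width), int(ymin * height))
    end = (int(xmax * width), int(ymax * height))
    return start, end


def class_name(class_id: int, class_list: List[str]) -> str:
    """Map a 1-based class id from the model to its name in a 0-based list."""
    return class_list[class_id - 1]


# A made-up detection on a 640 x 480 frame.
start, end = to_pixel_box((0.25, 0.5, 0.75, 1.0), width=640, height=480)
print(start, end)                                   # (320, 120) (640, 360)
print(class_name(2, ['Lion', 'Zebra', 'Elephant']))  # Zebra
```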