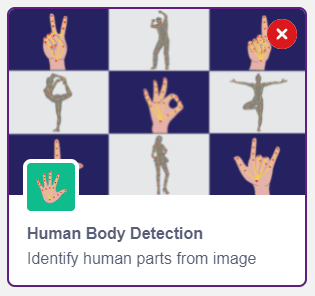

Human Body Detection

- Available in: Block Coding, Python Coding

- Mode: Stage Mode

- WiFi Required: No

- Compatible Hardware in Block Coding: evive, Quarky, Arduino Uno, Arduino Mega, Arduino Nano, ESP32, T-Watch, Boffin, micro:bit, TECbits, LEGO EV3, LEGO Boost, LEGO WeDo 2.0, Go DFA, None

- Compatible Hardware in Python: Quarky, None

- Object Declaration in Python: `pose = Posenet()`
- Extension Category: Artificial Intelligence

Introduction

Human body detection covers a range of technologies that detect the presence of a human body in an area or space. One of the most common examples is an air conditioner with human-detecting sensors, which locates the people in a room to balance comfort and energy savings.

This extension focuses on pose detection and hand pose detection. These algorithms identify where the joints of the body or hand are positioned.

Pose Detection

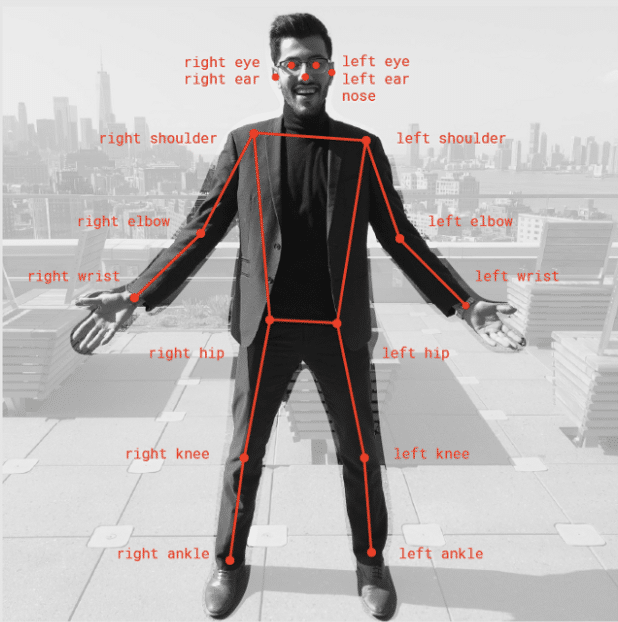

Pose detection is a real-time technique for detecting human poses in images. It estimates a person's pose by locating body parts such as the elbows, hips, wrists, knees, and ankles, and then forms a skeleton of the pose by joining these points.
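To make the idea of joining keypoints into a skeleton concrete, here is a minimal Python sketch that turns a set of detected keypoints into line segments. The keypoint names follow PoseNet's naming style, but the `SKELETON_EDGES` list, the `build_skeleton` helper, and the coordinates are hypothetical illustrations, not part of the PictoBlox API.

```python
# Hypothetical sketch: joining detected keypoints into a skeleton.
# Names and coordinates are illustrative only, not real PictoBlox output.

# Pairs of keypoints that form the "bones" of a simple upper-body skeleton.
SKELETON_EDGES = [
    ("leftShoulder", "rightShoulder"),
    ("leftShoulder", "leftElbow"),
    ("leftElbow", "leftWrist"),
    ("rightShoulder", "rightElbow"),
    ("rightElbow", "rightWrist"),
    ("leftShoulder", "leftHip"),
    ("rightShoulder", "rightHip"),
    ("leftHip", "rightHip"),
]


def build_skeleton(keypoints):
    """Return ((x1, y1), (x2, y2)) segments for every edge whose
    two endpoints were both detected."""
    segments = []
    for start, end in SKELETON_EDGES:
        if start in keypoints and end in keypoints:
            segments.append((keypoints[start], keypoints[end]))
    return segments


# A partial detection in which the right wrist was not found,
# so the rightElbow -> rightWrist bone is skipped.
detected = {
    "leftShoulder": (120, 80),
    "rightShoulder": (200, 80),
    "leftElbow": (100, 140),
    "leftWrist": (95, 200),
    "rightElbow": (220, 140),
    "leftHip": (130, 220),
    "rightHip": (190, 220),
}

for segment in build_skeleton(detected):
    print(segment)
```

Skipping edges with a missing endpoint keeps the drawing valid when part of the body is out of frame.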

PictoBlox uses the PoseNet model for pose detection.

PoseNet returns a total of 17 keypoints, from the eyes and ears down to the knees and ankles. Along with its detections, PoseNet reports a confidence score that indicates how sure it is about each keypoint and pose; low scores usually mean the image is unclear or part of the body is hidden.
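The sketch below lists PoseNet's 17 keypoints and shows one common way to use confidence scores: ignoring keypoints scored below a cutoff. The 0.5 threshold, the `confident_keypoints` helper, and the example scores are assumptions for illustration; PictoBlox may expose scores differently.

```python
# The 17 keypoints reported by PoseNet, in PoseNet's standard order.
POSENET_KEYPOINTS = [
    "nose",
    "leftEye", "rightEye",
    "leftEar", "rightEar",
    "leftShoulder", "rightShoulder",
    "leftElbow", "rightElbow",
    "leftWrist", "rightWrist",
    "leftHip", "rightHip",
    "leftKnee", "rightKnee",
    "leftAnkle", "rightAnkle",
]

# Assumed cutoff: keypoints scored below this are treated as unreliable.
MIN_CONFIDENCE = 0.5


def confident_keypoints(scores, threshold=MIN_CONFIDENCE):
    """Keep only keypoints whose confidence score meets the threshold.

    `scores` maps keypoint name -> confidence in [0, 1] (hypothetical format).
    """
    return [name for name in POSENET_KEYPOINTS
            if scores.get(name, 0.0) >= threshold]


# Hypothetical scores for a person whose legs are hidden behind a desk.
example_scores = {name: 0.9 for name in POSENET_KEYPOINTS[:13]}
example_scores.update({"leftKnee": 0.2, "rightKnee": 0.1,
                       "leftAnkle": 0.05, "rightAnkle": 0.05})

print(len(POSENET_KEYPOINTS))               # 17
print(confident_keypoints(example_scores))  # the 13 names from nose to rightHip
```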

Applications of Pose Detection

- Snapchat filters, which detect features such as the face and tongue to apply effects and virtual faces.

- Fitness apps such as Cult, which use it to detect your exercise poses.

- Instagram Reels, which uses pose detection to offer effects for your face and surroundings.

- Virtual games that analyze players' shots.

Hand Detection

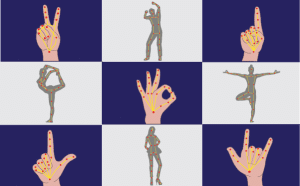

Hand detection works similarly to pose detection but tracks the points on the hand much more accurately. PictoBlox uses the MediaPipe model to detect hands and gestures; it predicts 21 3D keypoints for each detected hand.
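As an illustration of how the 21 hand keypoints are organised, the following sketch rebuilds MediaPipe's landmark order (the wrist, then four points per finger from base to tip) and picks out the fingertips. The `fingertips` helper and its input format, a list of 21 `(x, y, z)` tuples, are assumptions rather than the PictoBlox interface.

```python
# MediaPipe's 21 hand landmarks: the wrist plus four points on each finger.
FINGERS = ["THUMB", "INDEX_FINGER", "MIDDLE_FINGER", "RING_FINGER", "PINKY"]
THUMB_JOINTS = ["CMC", "MCP", "IP", "TIP"]
FINGER_JOINTS = ["MCP", "PIP", "DIP", "TIP"]


def hand_landmark_names():
    """Build the 21 landmark names in MediaPipe's index order (0 = wrist)."""
    names = ["WRIST"]
    for finger in FINGERS:
        joints = THUMB_JOINTS if finger == "THUMB" else FINGER_JOINTS
        names.extend(f"{finger}_{joint}" for joint in joints)
    return names


LANDMARKS = hand_landmark_names()


def fingertips(hand):
    """Return {finger: (x, y, z)} for the five fingertips of one hand.

    `hand` is a hypothetical list of 21 (x, y, z) tuples in landmark order.
    """
    return {finger: hand[LANDMARKS.index(f"{finger}_TIP")] for finger in FINGERS}


# Dummy hand whose landmark i sits at (i, i, 0.0), just to show the indexing.
example_hand = [(i, i, 0.0) for i in range(21)]

print(len(LANDMARKS))                                  # 21
print([LANDMARKS.index(f"{f}_TIP") for f in FINGERS])  # [4, 8, 12, 16, 20]
print(fingertips(example_hand)["PINKY"])               # (20, 20, 0.0)
```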

Use cases of Hand Detection


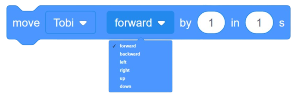

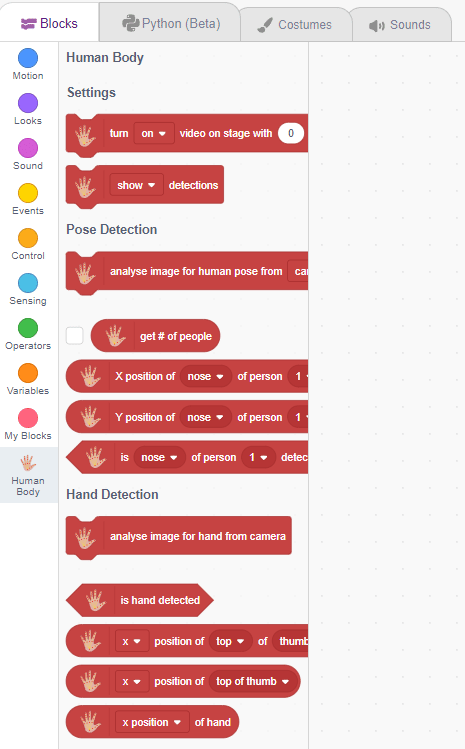

With the Human Body Detection extension of PictoBlox, you can analyze image input from a camera or the stage. The blocks in this extension let you:

- Get the pose of a human body from an image.

- Get the number of people in an image.

- Get the x and y coordinates of body features like shoulder, nose, mouth, etc.

- Detect hands in an image.

- Get the x and y coordinates of hands and different fingers.
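Camera images usually measure positions in pixels from the top-left corner, while the stage uses a centred origin with y pointing up. If you need to convert between the two, a minimal sketch follows. It assumes a 480 x 360 stage (x from -240 to 240, y from -180 to 180) and pixel input; the blocks may already report stage coordinates, in which case no conversion is needed. The `image_to_stage` helper is hypothetical.

```python
# Assumed stage size, matching a 480 x 360 Scratch-style stage.
STAGE_WIDTH = 480
STAGE_HEIGHT = 360


def image_to_stage(x_px, y_px, img_width, img_height):
    """Map a pixel position (origin top-left, y pointing down) to stage
    coordinates (origin at the centre, y pointing up)."""
    x_stage = (x_px / img_width) * STAGE_WIDTH - STAGE_WIDTH / 2
    y_stage = STAGE_HEIGHT / 2 - (y_px / img_height) * STAGE_HEIGHT
    return x_stage, y_stage


print(image_to_stage(320, 240, 640, 480))  # centre of a 640x480 frame -> (0.0, 0.0)
print(image_to_stage(0, 0, 640, 480))      # top-left corner -> (-240.0, 180.0)
```

Scaling by the frame size first makes the helper work for any camera resolution.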

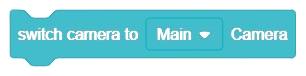

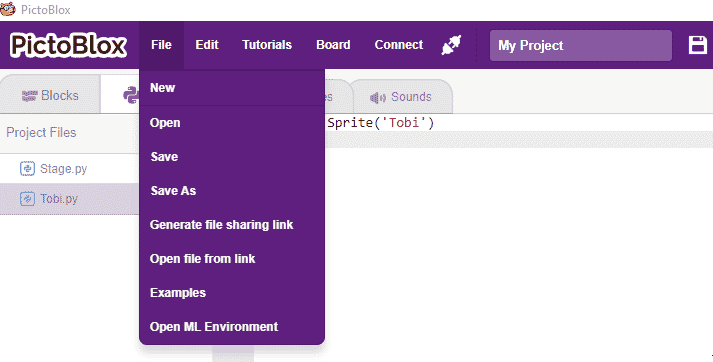

Accessing Human Body Detection in Block Coding

Follow these steps to add Human Body Detection to a PictoBlox project:

- Open PictoBlox and create a new file.

- Select the coding environment as Block Coding.

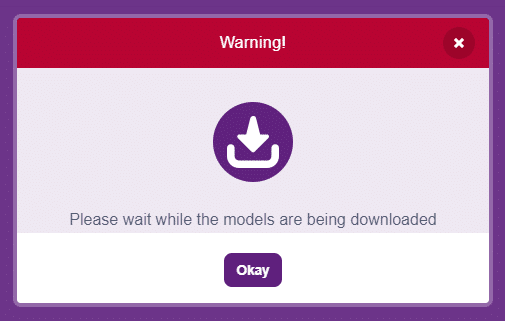

- Next, click on the Add Extension button and add the Human Body Detection extension.

- The Human Body Detection models will be downloaded, which may take a few seconds.

- Once the download finishes, you will see the message "Model loaded successfully".

- The Human Body Detection blocks are now available in the project.

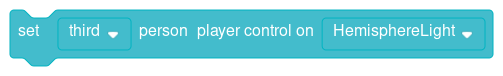

Accessing Human Body Detection in Python Coding

Follow these steps to add Human Body Detection to a PictoBlox project:

- Open PictoBlox and create a new file.

- Select the coding environment as Python Coding.

- Next, click on the Add Modules/Libraries button and add the Human Body Detection extension.

- To access the library functions, add the following object declaration:

```python
# Create the object that gives access to the Human Body Detection functions
pose = Posenet()
```